It all began with some CloudLab machines I needed to use for "research". CloudLab only offers CentOS, Ubuntu or FreeBSD images to provision new machines from. I have been daily driving Arch Linux for 3 years and very much enjoy its simplicity, so I wondered if it would be possible to put Arch Linux on the CloudLab machines. I vaguely remembered coming across a tutorial on installing NixOS from another running distro (it turned out to be [this](https://nixos.org/manual/nixos/stable/#sec-installing-from-other-distro)), so it should also be doable for Arch.

*[research]: bullshit

The research was very boring and I didn't like it at all, but by digging down this rabbit hole I learned some cool new system calls and got some more insight into Linux, which I am going to share here : )

:::success

This technique is also useful for VPSes. Some have a "custom ISO" feature where you can download any installer image, attach it to your VPS, then boot from it, while others don't. Depending on your host of choice, it may or may not be possible to install your favourite distro the regular way, but this nuclear option will always work as long as you have root access to any running Linux system.

:::

## Overview: the Typical Linux Installation Process

Those who have installed Arch Linux themselves should be very familiar with the procedures already, but for clarity I will go over it:

- Boot into an *installation medium*, usually an ISO image written to a USB drive. The installation medium contains a minimal Linux system (usually the target distro) with utilities to help you install the target distro.

- Partition the disk to make room for the new system, and format the new partitions. If you want encryption or the Logical Volume Manager, set it up here.

- Mount the filesystems that would be used for the new system, usually under `/mnt`. Make sure to mount sub-filesystems as well, e.g. `/boot/efi`.

- Bootstrap the new system's filesystems by creating the Linux file hierarchy and installing some basic packages. Arch Linux uses `pacstrap` (basically `pacman` with a chroot) and Debian uses `debootstrap`.

- Generate the filesystem table `/etc/fstab` for the newly installed system, then chroot into it to do some setup (e.g. locale, timezone and root password).

- Make sure that the kernel and initramfs are generated and placed correctly, and that the bootloader (usually grub) is installed and configured correctly.

We will largely be following the same flow, but skipping the `/etc/fstab` generation and the bootloader setup in favor of `kexec`, for reasons we will cover [later](#Actual-Installation).

## Prepare Arch Linux Installer

We will need some space for the Arch Linux installer, whether it's unused disk space (which you can create by shrinking existing ext4 partitions) or RAM with *tmpfs*. In my case, I am using the latter.

:::success

If you are doing this in a memory-bound environment (e.g. low-spec VPS), use disk space.

:::

:::info

#### Have access to a different live-CD-based installer?

For one VPS I own, the host allows booting from a pre-selected array of installer images, but does not allow custom ones. They do provide [netboot.xyz](https://netboot.xyz/) but it needs abundant memory to work. In this case I used a similar procedure, replacing the running installer with the Arch Linux installer. As CDROM is mounted read-only, the Arch installer has to fit somewhere else, and main memory is insufficient for it.

I always use LVM for my VPSes, so my way around that was to save a few gigs of free space in the VG for a temporary LV where the installer will reside. Later after the installation process I can reclaim this space if I wish, by deleting the installer LV and expanding the system LV.

*[VPS]: Virtual Private Server

*[LVM]: Logical Volume Manager

*[VG]: Volume Group

*[LV]: Logical Volume

:::

A quick sanity check to make sure I have the necessary amount of RAM:

```

root@node0:~# free -g

total used free shared buff/cache available

Mem: 251 2 250 0 0 249

Swap: 7 0 7

```

We have more than enough! Good to proceed. Now we will create a tmpfs to hold the Arch Linux environment that we will use for the installation process.

```

root@node-0:~# mount -t tmpfs -o size=50G tmpfs /mnt

root@node-0:~# df -h | grep /mnt

tmpfs 50G 0 50G 0% /mnt

```
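For a scripted setup, the memory check could be turned into a guard in front of the `mount`. The following is only a minimal sketch: `has_enough_ram` is a made-up helper name, and the threshold is an assumption you should pick yourself. Note that tmpfs pages are only allocated as files are written, so the threshold should reflect what the installer will actually occupy rather than the `size=` limit.

```bash
#!/usr/bin/env bash
# Sketch: check that enough memory is available before creating a tmpfs.
# has_enough_ram is a hypothetical helper, not part of any existing tool.

# Usage: has_enough_ram <needed_GiB> [meminfo_file]
# Succeeds if MemAvailable is at least <needed_GiB>.
has_enough_ram() {
    local needed_gib=$1
    local meminfo=${2:-/proc/meminfo}
    local avail_kib
    # MemAvailable is reported in KiB, e.g. "MemAvailable:   261095424 kB"
    avail_kib=$(awk '/^MemAvailable:/ { print $2 }' "$meminfo")
    [ -n "$avail_kib" ] && [ "$avail_kib" -ge $(( needed_gib * 1024 * 1024 )) ]
}

# Example (the 4 GiB threshold is an assumed figure):
# has_enough_ram 4 && mount -t tmpfs -o size=50G tmpfs /mnt
```

The optional second argument exists only so the helper can be tried against a saved copy of `/proc/meminfo`.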

With the new tmpfs, now it's time to populate it with the Arch Linux rootfs files. The latest bootstrap tarball can be downloaded from any mirror listed on the Arch Linux website.

```bash!

curl -O https://mirrors.edge.kernel.org/archlinux/iso/latest/archlinux-bootstrap-x86_64.tar.zst

tar --zstd -xvf archlinux-bootstrap-x86_64.tar.zst

```
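Before relying on the tarball, it may be worth comparing it against the checksum list published next to it. The sketch below assumes the mirror serves a `sha256sums.txt` file in the same directory that lists the bootstrap tarball under the same file name; `verify_download` is a hypothetical helper. A checksum fetched from the same mirror only guards against a corrupted download, not a tampered mirror; the PGP signature is the stronger check.

```bash
#!/usr/bin/env bash
# Sketch: verify a downloaded file against a checksum list.
# verify_download is a hypothetical helper name.

# Usage: verify_download <file> <sha256sums_file>
# Succeeds only if <file> is listed in <sha256sums_file> and its hash matches.
verify_download() {
    local file=$1 sums=$2 name expected actual
    name=$(basename -- "$file")
    # Each line of the list looks like "<hex digest>  <file name>"
    expected=$(awk -v n="$name" '$2 == n { print $1; exit }' "$sums")
    actual=$(sha256sum -- "$file" | awk '{ print $1 }')
    [ -n "$expected" ] && [ "$expected" = "$actual" ]
}

# Example (the sha256sums.txt URL is an assumption about the mirror layout):
# curl -O https://mirrors.edge.kernel.org/archlinux/iso/latest/sha256sums.txt
# verify_download archlinux-bootstrap-x86_64.tar.zst sha256sums.txt && echo verified
```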

Extracting the tarball should give us the folder `root.x86_64`. It contains a complete filesystem needed for an Arch Linux environment.

```!

root@node0:~# ls root.x86_64/

bin boot dev etc home lib lib64 mnt opt proc root run sbin srv sys tmp usr var version

```

We will copy everything in this folder to our tmpfs:

```bash

# -a preserves ownership, permissions (including setuid bits) and symlinks

cp -a root.x86_64/. /mnt/

```

At this point, we should already be able to chroot into `/mnt` and see that we have `pacman` available.

```

root@node-0:~# chroot /mnt pacman -V

.--. Pacman v7.0.0 - libalpm v15.0.0

/ _.-' .-. .-. .-. Copyright (C) 2006-2024 Pacman Development Team

\ '-. '-' '-' '-' Copyright (C) 2002-2006 Judd Vinet

'--'

This program may be freely redistributed under

the terms of the GNU General Public License.

```

## Pivot Root

But before we actually jump into the chroot, we have to make sure to properly handle special filesystems:

- `/run` and `/run/lock`

- `/dev`, `/dev/pts` and `/dev/shm`

- `/proc`

- `/sys`

You should be familiar with these if you have had to repair your Linux installation manually. Usually when you try to recover from a non-booting situation, you would mount your normal filesystems, bind mount these special ones from the live CD environment to the root fs of your broken installation, then chroot.

```!

root@node-0:~# for fs in /run /dev /dev/shm /dev/pts /sys /proc; do mount -o bind $fs /mnt$fs; done

```

:::warning

#### Use the Move Operation

It is better to use [`mount --move`](#Moving-Mounts) here instead of bind mounts, as we will later need to move some of these filesystems anyway.

In this case, the command would be

```bash!

for fs in /run /dev /sys /proc; do mount --move $fs /mnt$fs; done

```

Moving a mount would also move any child mounts within it, so we only need to move the top level ones.

This would fail without first making the mountpoints private. Read on for more details.

:::

Here instead of chroot-ing, we can use [`pivot_root`](https://elixir.bootlin.com/linux/v6.13.7/source/fs/namespace.c#L4338), a utility wrapping around the syscall of the same name. It changes the root mount, such that a specified directory **becomes** the new root, and the old root will be moved into a subdirectory in the new one. In the following command, we attempt to make `/mnt` the new root, and put the old Ubuntu root in `/mnt/mnt`, or the `/mnt` after pivoting.

```

root@node0:~# pivot_root /mnt /mnt/mnt

pivot_root: failed to change root from `/mnt' to `/mnt/mnt': Invalid argument

```

The command failed because of "Invalid argument". In the man page `pivot_root(2)`, `EINVAL` can indicate several different problems which we can check for. It turns out that in our case, this is the reason:

> **EINVAL** Either the mount point at ++new_root++, or the parent mount of that mount point, has propagation type **MS_SHARED**.

> [name=`man 2 pivot_root`] [color=#907bf7]

By "default", the root filesystem (and all mountpoints within it) is marked as "shared". This is because

> systemd(1) automatically remounts all mounts as MS_SHARED on system startup. Thus, on most modern systems, the default propagation type is in practice MS_SHARED.

> [name=`man 7 mount_namespaces`] [color=#907bf7]

We have to first mark it as "private". The `r` in `--make-rprivate` makes it recursive such that the new root also becomes a private mount:

```bash

mount --make-rprivate /

```
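To see which propagation type a mount currently has, `findmnt -o TARGET,PROPAGATION` is the quickest interactive option. The same information lives in the optional fields of `/proc/self/mountinfo`, which the sketch below reads directly. `mount_propagation` is a hypothetical helper name, and the sketch deliberately reports a mount that is both shared and slave simply as shared, since that is the case `pivot_root` rejects.

```bash
#!/usr/bin/env bash
# Sketch: report the propagation type of a mount point by reading mountinfo.
# mount_propagation is a hypothetical helper name.

# Usage: mount_propagation <mountpoint> [mountinfo_file]
# Prints "shared", "slave", "unbindable" or "private"; fails if not a mount.
mount_propagation() {
    local mountpoint=$1
    local mountinfo=${2:-/proc/self/mountinfo}
    awk -v mp="$mountpoint" '
        $5 == mp {
            state = "private"
            # Optional fields start at column 7 and end at a lone "-"
            for (i = 7; i <= NF && $i != "-"; i++) {
                if ($i ~ /^shared:/) state = "shared"
                else if ($i ~ /^master:/ && state != "shared") state = "slave"
                else if ($i == "unbindable" && state == "private") state = "unbindable"
            }
            # If several mounts are stacked on one path, the last entry wins
            result = state
        }
        END { if (result != "") print result; else exit 1 }
    ' "$mountinfo"
}

# Example: expect "shared" before and "private" after mount --make-rprivate /
# mount_propagation /
```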

Now if we retry the `pivot_root` call, it will succeed, but we will not see any difference at surface level. This is because

> **pivot_root()** changes the root directory and the current working directory of each process or thread in the same mount namespace to ++new_root++ if they point to the old root directory. (See also NOTES.)

> On the other hand, **pivot_root()** does not change the caller's current working directory (unless it is on the old root directory), and thus it should be followed by a **chdir("/")** call.

> [name=`man 2 pivot_root`] [color=#907bf7]

So the calling process (in our case, bash) effectively has an inconsistent view of the filesystem, as it still believes we are working from within `/root`, but its CWD is now actually `/mnt/root`. We can fix this by doing an arbitrary `cd`.

Now to inspect the mount namespace:

```

root@node0:~# df -h

Filesystem Size Used Avail Use% Mounted on

udev 126G 0 126G 0% /dev

tmpfs 26G 1.6M 26G 1% /run

/dev/sda3 63G 3.3G 56G 6% /mnt

tmpfs 126G 0 126G 0% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

/dev/sda1 256M 8.7M 248M 4% /mnt/boot/efi

tmpfs 26G 12K 26G 1% /mnt/run/user/<REDACTED>

tmpfs 50G 633M 50G 2% /

```

## Unmounting Old Root

Our `/` is now the tmpfs we previously created, and the Ubuntu root now resides in `/mnt`. It is now safe to unmount the old root...

```

root@node0:~# umount -R /mnt

umount: /mnt/run/user/<REDACTED>: target is busy.

```

... but no. I tried to figure out what was preventing the `umount`, but got no definitive answer. The best guess I have is [anonymous inodes](https://unix.stackexchange.com/questions/3109/how-do-i-find-out-which-processes-are-preventing-unmounting-of-a-device/386960#386960), as there are a lot of them. You cannot see whether these inodes are correlated with the particular filesystem you are trying to unmount though.

After all, there are still a bunch of running processes using resources from the old rootfs. We need to kill all of them to free up the old rootfs before we can unmount it. But be careful! The SSH daemon, `sshd`, is among these processes and is responsible for the session we are using to connect to the server. Killing `sshd` would lock us out of the system, bringing unnecessary trouble. Thus, we will treat `sshd` a bit differently, *restarting* it instead of killing it.

Before that, we must initialize `pacman` to install `sshd` in the Arch installer.

Standard procedure to initialize the keyring:

```bash

pacman-key --init && pacman-key --populate archlinux

```

At this point I realized that no mirror is enabled for pacman, so pacman cannot synchronize with the package repositories. Unfortunately the Arch bootstrap environment does not have an editor (none of vim, vi, emacs or nano). I ended up using a sed command to uncomment every mirror in the list (pacman tries them in order):

```bash

sed -i "s/#Server/Server/" /etc/pacman.d/mirrorlist

```

:::info

Alternatively, use nano from the old Ubuntu rootfs (`/mnt/usr/bin/nano`) to edit the mirrorlist.

:::
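The `sed` expression above uncomments every `#Server` line in the file. If you would rather enable a single mirror, a GNU sed address range can restrict the substitution to the first match. This is a minimal sketch that assumes GNU sed (which is what the Arch bootstrap ships); `enable_first_mirror` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: uncomment only the first "#Server" line of a pacman mirrorlist.
# enable_first_mirror is a hypothetical helper name; requires GNU sed.

# Usage: enable_first_mirror [mirrorlist_file]
enable_first_mirror() {
    local list=${1:-/etc/pacman.d/mirrorlist}
    # The GNU-specific address "0,/regex/" ends the range at the first match,
    # even if that match is on line 1. The empty pattern in s//.../ reuses
    # the most recently used regex, i.e. ^#Server.
    sed -i '0,/^#Server/s//Server/' "$list"
}

# Example:
# enable_first_mirror /etc/pacman.d/mirrorlist
```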

Now we can install OpenSSH:

```bash

pacman -Sy openssh

```

I also installed neovim so that I can edit `/etc/ssh/sshd_config` more comfortably. It is highly recommended to install an editor anyway.

The mechanism we will use to restart `sshd` is to send it a `SIGHUP`.

> sshd rereads its configuration file when it receives a hangup signal, SIGHUP, by executing itself with the name and options it was started with, e.g. /usr/sbin/sshd

> [name=`man 8 sshd`] [color=#907bf7]

To make sure this would succeed, check how `sshd` was launched:

```

root@node0:/# ps -ax | grep sshd

1549 ? Ss 0:00 sshd: /usr/sbin/sshd -D [listener] 1 of 10-100 startups

*irrelevant entries omitted*

```

and double check that we now have `/usr/sbin/sshd`. Before we actually do this, edit the configuration file as necessary. I copied the host keys over from the old Ubuntu installation, so that I don't need to re-verify them when I later reconnect:

```bash

cp /mnt/etc/ssh/ssh_host_* /etc/ssh/

```

I also added my own public key to `/root/.ssh/authorized_keys`. Make sure the permissions are good! Finally,

```bash

killall -HUP sshd

```

to actually restart it. The connection will be dropped, and we now need to reconnect using the root account and the corresponding private key. Now that `sshd` is again safely running under the Arch environment, we can kill off the remaining processes that still use `/mnt`:

```bash

# -t prints bare PIDs only; -F p output would also contain f<fd> lines

lsof -t +f -- /mnt | xargs -r kill

```

But we still cannot unmount `/mnt`:

```

[root@node0 ~]# umount -R /mnt

umount: /mnt/run: target is busy.

```

This is because even though we have attempted to kill all processes using anything under `/mnt`, there is one process we cannot kill: `init`, a.k.a. `systemd`. The kernel never delivers `SIGKILL` to PID 1, and it only delivers other signals for which PID 1 has installed a handler, so attempts to kill it return normally without terminating it. We can confirm that `systemd` is the only process still keeping some special filesystems busy.

```

[root@node0 ~]# lsof /mnt/run/

COMMAND PID USER FD TYPE DEVICE SIZE/OFF NODE NAME

systemd 1 root 56u FIFO 0,25 0t0 718 /mnt/run/dmeventd-server

systemd 1 root 58u FIFO 0,25 0t0 719 /mnt/run/dmeventd-client

```

### Moving Mounts

Although it is impossible to unmount these filesystems, they merely *reside* in `/mnt` and have nothing to do with the filesystem underneath `/mnt` itself. We only need to *move* them out of `/mnt` to unmount `/mnt`. Fortunately, there is a tool we can leverage:

```bash

mount --move /mnt/dev /dev

```

It does exactly what we want: move the mount without unmounting and remounting it. Do the same for `/run` and `/sys`, and `/mnt` should now be safe to unmount.

```bash

umount -R /mnt # should succeed!!

```

## Actual Installation

There are two ways to proceed from here. On one hand, we can wipe Ubuntu entirely, removing its partition or reformatting it for Arch as we wish; on the other hand, we can install Arch in parallel to Ubuntu.

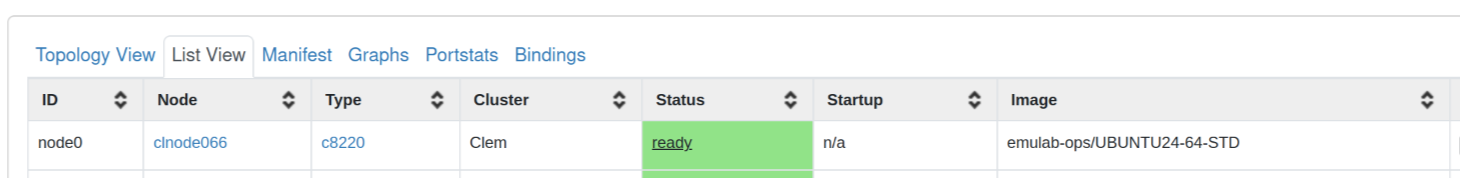

Normally, we would want the former, but CloudLab is a bit special. It embeds a few services of its own in the Ubuntu images. These services communicate with CloudLab's control plane and enable managing the machines from CloudLab's web UI. In particular, the "status" shown here is reported via such a mechanism. If we break it, CloudLab will think the host has failed and will periodically attempt to physically reboot it. This effectively renders the host unusable.

Thus, I am keeping the Ubuntu installation intact and installing Arch in a separate partition. CloudLab machines do not use all available disk space for the OS, so there is plenty of free space available.

```

[root@node0 ~]# fdisk -l

Disk /dev/sdb: 931.51 GiB, 1000204886016 bytes, 1953525168 sectors

Disk model: ST91000640NS

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk /dev/sda: 931.51 GiB, 1000204886016 bytes, 1953525168 sectors

Disk model: ST91000640NS

Units: sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disklabel type: gpt

Disk identifier: 916BB3F3-230A-44F6-8AFC-B0EE55667823

Device Start End Sectors Size Type

/dev/sda1 2048 526335 524288 256M EFI System

/dev/sda2 526336 528383 2048 1M BIOS boot

/dev/sda3 528384 134746111 134217728 64G Linux filesystem

/dev/sda99 1936746496 1953523711 16777216 8G Linux swap

```

The plan is to leave Ubuntu's bootloader untouched, so that Ubuntu boots normally each time the machine starts. CloudLab's healthcheck service then runs as usual and reports the status properly. Luckily, CloudLab does not require heartbeats, so one single report is enough to make it happy.

But how are we going to use the new Arch installation then? We could use a chroot, but it does not give us the full power, as we cannot use our own kernel. Enter [`kexec`](#kexec), Linux's syscall that allows replacement of the running kernel without a reboot.

Here is the small snippet I use to automate the installation process, but it's basically just following the official [installation guide](https://wiki.archlinux.org/title/Installation_guide).

```bash!

# Create new partition next to the Ubuntu system partition

sys_drive=/dev/sda # change to yours if it differs

fdisk_output=$( (echo n; echo; echo; echo; echo w) | fdisk $sys_drive)

new_part="$sys_drive"$(echo $fdisk_output | grep -o -P "Created a new partition \K\d+")

# Format and mount the new partition

yes | mkfs.ext4 $new_part

mkdir /target

mount $new_part /target

# Install Arch Linux

echo nameserver 1.1.1.1 >> /etc/resolv.conf

pacstrap /target linux-lts grub base base-devel zsh tmux git dhcpcd openssh neovim

mkdir -p -m 700 /target/root/.ssh # not created by pacstrap

cp /root/.ssh/authorized_keys /target/root/.ssh/

# Enter chroot to do some setup

arch-chroot /target bash <<EOF

set -x

echo nameserver 1.1.1.1 >> /etc/resolv.conf

echo $HOSTNAME > /etc/hostname

systemctl enable dhcpcd sshd

useradd -m -s /usr/bin/zsh -G wheel saltyfish

sed -i "s/# %wheel ALL=(ALL:ALL) NOPASSWD/%wheel ALL=(ALL:ALL) NOPASSWD/" /etc/sudoers

mkdir /home/saltyfish/.ssh

chown -R saltyfish:saltyfish /home/saltyfish/.ssh

EOF

```
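One caveat with the snippet above: it builds the partition path by appending the partition number directly to the drive name. That works for `/dev/sda`, but drives whose names end in a digit (NVMe, eMMC, loop devices) use a `p` separator, e.g. `/dev/nvme0n1p4`. A small helper along these lines could cover both cases; `partition_path` is a hypothetical name, and I have not tried it on CloudLab hardware.

```bash
#!/usr/bin/env bash
# Sketch: build a partition device path from a drive and a partition number.
# partition_path is a hypothetical helper name.

# Usage: partition_path <drive> <number>
partition_path() {
    local drive=$1 number=$2
    case $drive in
        # Drive names ending in a digit (nvme0n1, mmcblk0, loop0) take a "p"
        *[0-9]) printf '%sp%s\n' "$drive" "$number" ;;
        *)      printf '%s%s\n'  "$drive" "$number" ;;
    esac
}

# Possible use in the installation snippet:
# part_num=$(echo "$fdisk_output" | grep -o -P "Created a new partition \K\d+")
# new_part=$(partition_path "$sys_drive" "$part_num")
```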

## `kexec`

Now we are finished with the (half-complete) installation. We can try booting into it. We will be using the `kexec` system call. There is a utility wrapping around it with the same name. In both Arch Linux and Ubuntu, install the package `kexec-tools` to have it.

To run a Linux kernel, you need at least three things: (1) the kernel itself, (2) the initramfs, and (3) kernel parameters. You will use `kexec -l` to *load* these before running `kexec -e`.

Back in the installer environment, the kernel we want to run is `/target/boot/vmlinuz-linux-lts` (because I installed `linux-lts`) and the initramfs is `/target/boot/initramfs-linux-lts.img`. These are easy to find and fill in, but the kernel parameters are a bit trickier. Let's start by looking at Ubuntu's parameters:

```!

[root@node0 ~]# cat /proc/cmdline

BOOT_IMAGE=/boot/vmlinuz-6.8.0-53-generic root=UUID=ccb0832d-c80e-430f-b248-d117889f1992 emulabcnet=34:17:eb:e5:59:d5 ro console=ttyS1,115200

```

- `BOOT_IMAGE`: Points to the kernel in use. [Presumably](https://unix.stackexchange.com/questions/248825/what-is-the-boot-image-parameter-in-proc-cmdline) there to enable certain userspace programs to identify the kernel. We should change it to `/boot/vmlinuz-linux-lts`, the kernel's location from the new installation's POV.

- `root`: Points to the partition containing the root filesystem. The initramfs will later `pivot_root` (or `switch_root`, see man page `pivot_root(2)` for details) to it to finalize the boot procedure. We should set it correspondingly.

- `emulabcnet`: Looks like CloudLab-specific stuff. Safe to drop.

- `ro`: Mount the root filesystem (not the initramfs) read-only at boot. `mkinitcpio`'s init already falls back to `ro` when neither `ro` nor `rw` is given, so it is safe to drop.

- `console=ttyS1,115200`: Enables serial console so that CloudLab's "console" feature works. Safe to drop, but better to keep it as-is.

To find the correct root UUID, run

```bash

blkid -o value -s UUID $new_part

```

where `$new_part` is your partition containing the root filesystem. Append the printed UUID to `UUID=` to make the value for the `root` parameter.

The final parameters to use for our new installation are thus `BOOT_IMAGE=/boot/vmlinuz-linux-lts root=UUID=<YOUR_UUID> console=ttyS1,115200`. It's time to put everything together:

```!

kexec -l /target/boot/vmlinuz-linux-lts --initrd=/target/boot/initramfs-linux-lts.img --command-line="BOOT_IMAGE=/boot/vmlinuz-linux-lts root=UUID=<YOUR_UUID> console=ttyS1,115200"

```

If this finishes successfully, we can now `kexec -e`. Your SSH session should freeze after you issue this command because the kernel is abruptly replaced, giving `sshd` no time to shut down the connection gracefully. You can kill the `ssh` process or wait for it to time out. It should not take long to boot up the new kernel, so you should shortly be able to log in again. After you log in, you can confirm you're on the Arch-packaged kernel using `uname -a`.
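The parameter rewriting described in this section (replace `BOOT_IMAGE` and `root`, drop `emulabcnet` and `ro`, keep everything else) could also be scripted instead of done by hand. The following is a minimal sketch under that reading; `build_cmdline` is a hypothetical helper, and it does not handle quoted parameters that contain spaces.

```bash
#!/usr/bin/env bash
# Sketch: derive the new kernel command line from the running one.
# build_cmdline is a hypothetical helper name.

# Usage: build_cmdline <old_cmdline> <kernel_path> <root_uuid>
build_cmdline() {
    local old=$1 kernel=$2 uuid=$3
    local out="BOOT_IMAGE=$kernel root=UUID=$uuid"
    local -a params
    local param
    # Split on whitespace without glob expansion
    read -r -a params <<< "$old"
    for param in "${params[@]}"; do
        case $param in
            BOOT_IMAGE=*|root=*|emulabcnet=*|ro) ;;   # replaced or dropped
            *) out+=" $param" ;;                      # kept, e.g. console=
        esac
    done
    printf '%s\n' "$out"
}

# Possible use (new_part as in the installation snippet):
# build_cmdline "$(cat /proc/cmdline)" /boot/vmlinuz-linux-lts \
#     "$(blkid -o value -s UUID "$new_part")"
```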

## Finale

From here, simply start enjoying the power of Arch. If you ever need to reboot, the machine will still reboot into Ubuntu, but you can again use `kexec` to switch over to Arch. (Attention: you will need to mount the Arch partition first to find the kernel and initramfs files.)
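For repeated use, the hop from Ubuntu back into Arch could be wrapped in a small function. This is only a sketch: `switch_to_arch`, the `/mnt/arch` mount point and the `DRY_RUN` switch are all made up for illustration, and the partition and UUID are whatever your installation ended up with. It needs `kexec-tools` on the Ubuntu side and must run as root.

```bash
#!/usr/bin/env bash
# Sketch: hop from the freshly booted Ubuntu into Arch again.
# switch_to_arch, its mount point and the DRY_RUN switch are hypothetical.

# Usage: [DRY_RUN=1] switch_to_arch <arch_partition> <root_uuid>
switch_to_arch() {
    local part=$1 uuid=$2 mnt=/mnt/arch
    local cmdline="BOOT_IMAGE=/boot/vmlinuz-linux-lts root=UUID=$uuid console=ttyS1,115200"
    local run=()
    # With DRY_RUN=1 every command is printed instead of executed
    if [ "${DRY_RUN:-0}" = 1 ]; then run=(echo); fi

    # kexec -l copies the kernel and initramfs into memory, so the
    # partition can be unmounted again before kexec -e.
    "${run[@]}" mkdir -p "$mnt" &&
    "${run[@]}" mount -o ro "$part" "$mnt" &&
    "${run[@]}" kexec -l "$mnt/boot/vmlinuz-linux-lts" \
        --initrd="$mnt/boot/initramfs-linux-lts.img" \
        --command-line="$cmdline" &&
    "${run[@]}" umount "$mnt" &&
    "${run[@]}" kexec -e
}

# Example: preview the commands first (partition and UUID are placeholders)
# DRY_RUN=1 switch_to_arch /dev/sda4 "<YOUR_UUID>"
```

Running it once with `DRY_RUN=1` is a cheap way to double check the paths before the kernel is actually replaced.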

It is, in theory, possible to port the necessary CloudLab component to Arch and create a systemd unit for it so we no longer need Ubuntu, but I will leave it to Future Work(TM) to figure out how.

*[Future Work]: whatever the author is too lazy to study

## References

https://gist.github.com/m-ou-se/863ad01a0928e184b2b8