This year I decided to refactor my personal cloud infrastructure. Because of various nuances in my seemingly weird new setup, I ran into various difficulties. And yet, I managed to resolve all of them, at the cost of several days of lost sleep. Here I will write about some of the takeaways and new things I learned along the way, in the hope that this helps other self-hosting enthusiasts avoid going through networking hell alone.

This article does not assume you have a lot of networking background, and I will explain everything in as much detail as I can. However, you should at least have a rough idea of how computer networks work, and be able to use search engines to do independent research.

Disclaimer

Everything here is based solely on my personal understanding of the matter. I do not guarantee absolute correctness of what I say in this article. If you spot any errors, please contact me to help me correct them. Thank you.

Recap

I don't think I have ever documented my old infrastructure, and I probably don't need to, because it was very simple, and very immature. But just for the sake of demonstrating what changes I am making to it, I should at least list some of the key ideas I used:

- Docker Compose was used to encapsulate all services I run.

- ZFS datasets were used to persist data.

- Because I was back in China, where self-hosting was (and still is) basically forbidden, I had to use some sort of reverse proxying to expose my services to the public internet. The tool I used was frp.

I won't make any changes to the Docker Compose part or the ZFS part because I am pretty satisfied with them. Yeah - I hear you questioning me: "how dare you call it cloud if it does not use Kubernetes?" I do have a K8s-centered plan. It is not in any sense completed yet, and I will write about it when it is largely done.

If you are experienced with computer networks, and you know what frp is, you will immediately notice that there were very obvious issues with this setup. frp is a transport layer reverse proxy. The way it works is:

- The frp server, or frps, runs on a machine with a public IP address (say 1.2.3.4) and listens on a port, say 50000.
- The frp client, or frpc, runs on my home server, which has no public IP address, and connects to frps at 1.2.3.4:50000. frpc registers the ports it wants to expose (say 443) over the port 50000 channel. frps receives the requests and listens on 1.2.3.4:443.
- An external user initiates a connection to 1.2.3.4:443. frps receives the packet, reads the transport layer payload, and forwards it to the client over the port 50000 channel. frpc receives the transport layer payload and initiates a connection to 127.0.0.1:443 with the received payload.
- All subsequent packets are forwarded over the port 50000 channel in a similar fashion.

Here a very obvious problem is that all traffic sent to my server was recognized as coming from 127.0.0.1, because it appeared as if frpc, running on the local machine, had initiated all the connections. I was young and naive about security at that time, so I thought that as long as nobody noticed the existence of my personal cloud, it was not a big deal that the server could not see the real source IP addresses of network connections. After all, who was going to attack me?

I was totally wrong. One day I noticed that my postmaster inbox had started receiving complaints about my server sending spam. I did not pay attention to it, thinking they were sent by mistake. I saw a lot of emails filtered out by Rspamd, but I ignored that too, thinking these were "incoming" spam. Then one day spam became so huge a problem that my server literally started to lag, because all its CPUs were busy identifying spam. I could no longer live with that, so I investigated what had happened. It was one of the things you never wish to happen to you: my mail server had been operating as an open relay, and motherfuckers spammers soon noticed that.

The reason was that I was using Mailu to deploy my mail server. Mailu's default Postfix configuration "trusts" local IP addresses, treating them as impossible to be spammers. They do this for a reason, but in my setup it caused trouble, because all emails appeared to come from the local host. I was stupid and lazy back then, so rather than fixing the issue, I chose to work around it by making SMTP an exception to the reverse proxying. Details about this workaround are out of scope for this article.

As you see, using frp is not the best option. Thus, the major goal of my refactor is to enable my home server to see the real source IP addresses of clients.

WireGuard: Quest for Real IP

I then decided to fix this critical issue. Since I moved to the US, I now have a public IP address and no longer need an awkward reverse proxying setup. However, there is bad news: my ISP blocks outbound traffic to port 25. This is a reasonable anti-abuse practice, but it also causes trouble for legitimate users (like me!). Another issue is privacy: I do not want to expose my home IP address to the public. Thus, I planned to ditch frp but still use some sort of reverse proxying, just to bypass the ISP restrictions and hide my home IP.

Note that certain VPS providers also block outbound SMTP. I won't recommend specific service providers, but there are service providers who trust their users and do not do so by default. Please do me a favor by not abusing these angelic service providers.

As transport layer proxies do not preserve source IP information, we need to go a bit deeper into the network stack, to the network layer, because this is where the IP headers reside. A VPN is a great tool for network layer reverse proxying. However, traditional VPNs (L2TP, IPsec, etc.) are IMHO very convoluted and difficult to set up securely and correctly. Thus, I decided to settle on WireGuard, a relatively new VPN protocol.

Linus Torvalds loved WireGuard so much that he merged it into the Linux kernel (it has been in mainline since Linux 5.6), so WireGuard is relatively easy to set up. One caveat, though: if your VPS is virtualized using OpenVZ, it tends to run an older kernel that does not include WireGuard. In this case, you can use BoringTun by Cloudflare. It is a userspace implementation of WireGuard, so it does not need to interact with the kernel module, which also reduces the level of privileges required.

Characters

Before we dive into the technical details of my new setup, let's make clear what I have:

- Gateway refers to my VPS, which provides the public IP address that masks my home IP and forwards outbound SMTP traffic.

- Server refers to my home server hosting all the private cloud services I use on a daily basis.

Setting up WireGuard

The first step is to establish a channel between Gateway and Server by setting up WireGuard. Let's start with the simplest configuration. Generate a keypair on each host using

$ wg genkey | tee private.key | wg pubkey | tee public.key

and optionally use

$ wg genpsk

to generate a pre-shared key. Then, we create the configuration file /etc/wireguard/wg0.conf on Gateway:

[Interface]
PrivateKey = <Gateway private key>
Address = 192.168.160.1
ListenPort = 51820

[Peer]
PublicKey = <Server public key>
PresharedKey = <PSK>
AllowedIPs = 192.168.160.2/32

and on Server:

[Interface]
PrivateKey = <Server private key>
Address = 192.168.160.2

[Peer]
PublicKey = <Gateway public key>
PresharedKey = <PSK>
AllowedIPs = 0.0.0.0/0
Endpoint = <Gateway public IP>:51820
PersistentKeepalive = 60

The PersistentKeepalive item makes WireGuard on Server send a keepalive packet to Gateway every 60 seconds. The tunnel carries traffic in both directions, but only one side can establish the underlying connection: Gateway has a public IP address while Server does not (or at least we do not intend to use it). If we do not enable keepalives and the connection is somehow interrupted (for example, because a NAT mapping on the way expired), Gateway can no longer reach Server until Server contacts it again.

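Another interesting item is AllowedIPs. When the interface is brought up, wg-quick automatically adds routes to wg0 for these IP ranges. WireGuard itself also uses the list in both directions: a packet is sent to this peer only if its destination falls within the peer's AllowedIPs, and a packet received from this peer is accepted only if its source falls within them. Intuitively, these are the IP addresses we are allowed to reach via the tunnel.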
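WireGuard calls this mechanism cryptokey routing. Below is a toy model of the prefix test at its core, IPv4 only. It is a sketch, not WireGuard's code: it checks one address against one CIDR block, whereas WireGuard performs a longest-prefix match across all peers. ip_to_int and in_cidr are hypothetical helper names.

```shell
#!/bin/sh
# Toy model of the AllowedIPs prefix check (IPv4 only, one block at a time).

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed if address $1 lies inside CIDR block $2 (e.g. 192.168.160.2/32).
in_cidr() {
  local addr net len mask
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  len=${2#*/}
  # A /0 block such as 0.0.0.0/0 matches every address.
  if [ "$len" -eq 0 ]; then
    return 0
  fi
  # Keep only the top $len bits of both addresses and compare them.
  mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
  [ $(( addr & mask )) -eq $(( net & mask )) ]
}
```

With the configuration above, in_cidr 1.1.1.1 0.0.0.0/0 succeeds, so on Server any destination routed into wg0 is handed to Gateway, while Gateway's 192.168.160.2/32 means it only accepts tunneled packets from Server whose source is exactly 192.168.160.2.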

After we have created the configuration files, we can use wg-quick up wg0 to bring the WireGuard interfaces up.
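Since both files are plain text, you might prefer to generate them from the key files rather than pasting keys by hand. A minimal sketch, assuming exactly the layout shown above; render_gateway_conf and render_server_conf, and their argument order, are hypothetical.

```shell
#!/bin/sh
# Render the two wg0.conf files shown above from key material.

# $1 = Gateway private key, $2 = Server public key, $3 = pre-shared key
render_gateway_conf() {
  cat <<EOF
[Interface]
PrivateKey = $1
Address = 192.168.160.1
ListenPort = 51820

[Peer]
PublicKey = $2
PresharedKey = $3
AllowedIPs = 192.168.160.2/32
EOF
}

# $1 = Server private key, $2 = Gateway public key, $3 = pre-shared key,
# $4 = Gateway public IP
render_server_conf() {
  cat <<EOF
[Interface]
PrivateKey = $1
Address = 192.168.160.2

[Peer]
PublicKey = $2
PresharedKey = $3
AllowedIPs = 0.0.0.0/0
Endpoint = $4:51820
PersistentKeepalive = 60
EOF
}
```

For example, on Gateway one could run umask 077 and then render_gateway_conf "$(cat private.key)" "<Server public key>" "<PSK>" > /etc/wireguard/wg0.conf; the umask keeps the file, which contains a private key, readable by root only.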

If using BoringTun, add these environment variables: WG_QUICK_USERSPACE_IMPLEMENTATION=boringtun-cli WG_SUDO=1. I added them to /etc/environment, as well as to the systemd override file for the service wg-quick@wg0, so that I can use systemd to manage the WireGuard interface. Note that TUN/TAP must be enabled. You can check this with stat /dev/net/tun: if TUN/TAP is not enabled, you will get "No such file or directory".
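The systemd part can be written as a drop-in file. A sketch, assuming the standard drop-in directory for the wg-quick@wg0 unit (systemctl edit wg-quick@wg0 creates the same override.conf interactively); write_boringtun_override and tun_available are hypothetical helper names.

```shell
#!/bin/sh
# Write a systemd drop-in that makes wg-quick@wg0 use BoringTun.
# $1 = drop-in directory, normally /etc/systemd/system/wg-quick@wg0.service.d
write_boringtun_override() {
  mkdir -p "$1"
  cat > "$1/override.conf" <<'EOF'
[Service]
Environment=WG_QUICK_USERSPACE_IMPLEMENTATION=boringtun-cli
Environment=WG_SUDO=1
EOF
}

# Succeed if the TUN device node exists (it is a character device).
tun_available() {
  [ -c /dev/net/tun ]
}
```

After writing the file, run systemctl daemon-reload so that systemd picks up the override before starting wg-quick@wg0.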

First Encounter with Routing

It seems that WireGuard is up and running now, and you can confirm this by pinging 192.168.160.1 from Server (or pinging Server from Gateway). However, pinging any other address results in 100% packet loss. Let's try to understand why:

server$ ip route get 1.1.1.1
1.1.1.1 dev wg0 table 51820 src 192.168.160.2 uid 1000
    cache

The ip route get command takes an IP address and returns the routing decision for packets with that destination. Here, it tells us: packets sent to 1.1.1.1 will be routed through the interface wg0, as a result of looking up the routing table numbered 51820, and they will be assigned the source IP address 192.168.160.2.

In Linux, routing is done by looking up routing tables, which you can view using the ip route show table <table> command. There are some default tables: local, which holds the host's own local and broadcast addresses; main, the main routing table; and default, the ultimate fallback. However, a routing table is not consulted unless a rule tells the kernel to do so. Rules (some people prefer to call them routing policies) can be viewed with the command ip rule. Let's try to understand where the routing decision for 1.1.1.1 came from:

server$ ip rule
0: from all lookup local
32764: from all lookup main suppress_prefixlength 0
32765: not from all fwmark 0xca6c lookup 51820
32766: from all lookup main
32767: from all lookup default

The rule with preference 32765 dictates that all packets without the firewall mark 0xca6c should consult the routing table numbered 51820. This rule was created by wg-quick when we used it to bring up our wg0 interface. We will skip over fwmark for now. The short explanation is that WireGuard marks the encrypted packets it sends itself with 0xca6c, so this rule excludes them. Thus, the rule translates to: "all traffic that was not sent by WireGuard should be routed to WireGuard". The rule just above it, also added by wg-quick, consults main but suppresses any match with prefix length 0 (the default route), so more specific routes in main, such as the one for your LAN, still take precedence.

server$ ip route show table 51820
default dev wg0 scope link

Forwarding

While this looks intuitive, it actually will not work! There are two things missing here. First, we need to make sure that forwarding is enabled on Gateway. Forwarding means relaying packets that are not directly related to the local host. In our case, Gateway needs to do forwarding to connect Server with other hosts on the internet. This means Gateway will receive packets with neither the source IP nor the destination IP matching its own, and it needs to forward these packets between Server and whichever host Server is communicating with.

By default, the Linux kernel might not allow forwarding packets for other hosts, because not all machines need to act as routers. Allowing forwarding would add some security risks, so it's better disabled if not used. However, in our setup, since Gateway is a gateway which forwards traffic for Server, it does need to allow forwarding.

Whether forwarding is allowed is controlled by the sysctl variable net.ipv4.ip_forward, which needs to be set to 1. We can use the command

gateway# sysctl -n net.ipv4.ip_forward

to check the current value, and

gateway# sysctl -w net.ipv4.ip_forward=1

to set it to 1 if it is not already. However, this change will be lost once the machine reboots. To persist it, we could edit the configuration file /etc/sysctl.conf, but I prefer to add an override in /etc/sysctl.d/. We create a new file 10-forwarding.conf containing net.ipv4.ip_forward = 1, and load it with sysctl -p /etc/sysctl.d/10-forwarding.conf so that the change takes effect immediately (plain sysctl -p only reads /etc/sysctl.conf).

Security

Keep in mind that allowing forwarding bears some security risks. If we forward everything we receive, there is a chance that malicious hackers could use our Gateway to proxy their traffic when attacking other servers! We have to set up some finer control over what could be forwarded and what should be dropped.

We will first make Netfilter drop every packet that needs to be forwarded unless a rule allows it, by setting the policy of the FORWARD chain in the filter table to DROP (a built-in chain's policy can only be ACCEPT or DROP; if you prefer REJECT, append a final -j REJECT rule instead):

gateway# iptables -P FORWARD DROP

Remember that iptables rules are not persistent, so you have to come up with a way to apply them automatically on each boot. The easiest way is to add the command to /etc/rc.local (I am using Debian, YMMV), but you might want to use more advanced tools like iptables-persistent. Since my Gateway does nothing other than forwarding traffic for Server, I just went with the simplest solution.

This again renders our Gateway incapable of forwarding anything, but we do need to enable forwarding both to and from wg0. We can use these two commands to achieve this:

gateway# iptables -A FORWARD -i wg0 -j ACCEPT
gateway# iptables -A FORWARD -o wg0 -j ACCEPT

These rules say that all packets that come from (-i) or go to (-o) the wg0 interface should be allowed to pass. Some tutorials on the internet would ask you to do

gateway# iptables -A FORWARD -i wg0 -m conntrack --ctstate NEW -j ACCEPT
gateway# iptables -A FORWARD -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

This is preferable if you do not need to accept inbound connections. In that case, you would drop the -o wg0 rule and use these instead. We will cover conntrack in more detail later when we talk about DNAT. Here is a brief explanation: the second rule says that all packets that belong to an already established connection, or are related to one, should be allowed to pass. Without it, although Server would be able to reach internet hosts through Gateway, all response packets would be dropped. This rule, while allowing responses to be delivered, does not allow incoming connections from the internet to be established. However, since I do want to allow incoming connections, I allowed all traffic headed towards wg0.

You might want to have finer control over what ports are open, etc. This is out of scope for this post, but yes, you can do it here by using the conntrack rule in conjunction with some other rules that open up specific ports.

SNAT

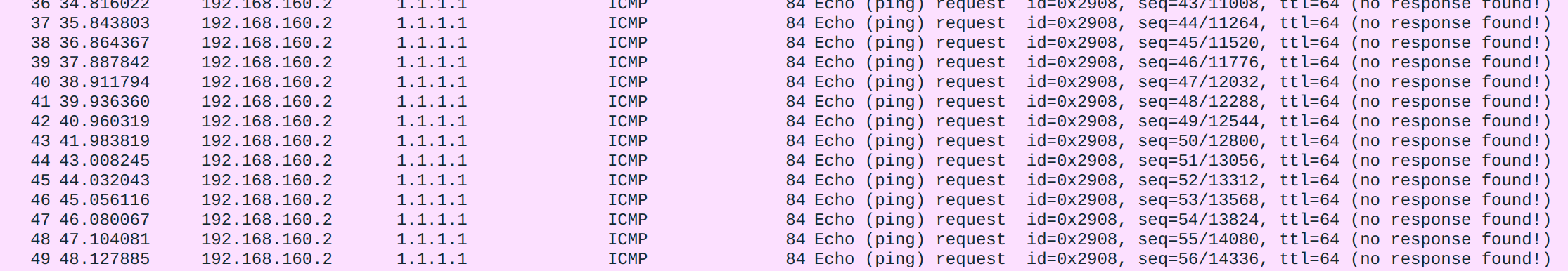

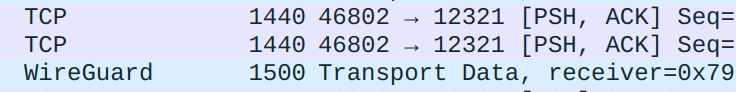

However, you will notice that you still cannot access WAN IPs over the tunnel. Let's inspect the packets to determine the reason. I used tcpdump on both Server and Gateway to capture traffic on wg0. Here is the command:

# tcpdump -i wg0 -w output.pcap

And here is what we see on both hosts (I use Wireshark to view pcap files):

The reason our ping failed is obvious here: our ICMP packet successfully reached Gateway, but no response ever came back from 1.1.1.1. Of course the reply cannot arrive back at Gateway, because 1.1.1.1 has no idea it should send it there! The only way a host can know how to reach another host that contacted it is to read the source IP address from the IP header, and in this case it is 192.168.160.2, a private IPv4 address. As a result, 1.1.1.1 will think 192.168.160.2 just pinged it and send any reply to 192.168.160.2, an address that cannot be routed on the public internet.

What we want instead is that reply packets get sent to Gateway first, and forwarded back to Server by Gateway. The only way to tell another host to reply to Gateway is to inform it of Gateway's public IP address, so we will have to rewrite the packets' IP headers to replace the source IP addresses. Not only do we have to replace the IP addresses, but we also need to keep track of the connection info and identify the reply packets, such that we can forward them back to Server. That sounds like a lot of work, but it's such a common scenario that the Linux kernel provides a specialized tool to help accomplish it: SNAT, or Source Network Address Translation.

Using SNAT is as simple as adding a rule in the POSTROUTING chain in the nat table in Netfilter. To help illustrate the flow of network packets, here is an image showing the structure of Linux's network stack, taken from Wikipedia. For now we will only focus on the IP layer (i.e. the green section).

SNAT happens in the postrouting phase, after the routing decision for the outgoing packet has already been made. Let's go through an example scenario where our public IP is 2.2.2.2:

- Server at 192.168.160.2 wants to send a packet to 1.1.1.1.
- The routing table says the packet should be sent over WireGuard, through Gateway, or 192.168.160.1.
- Server sends the packet with source IP 192.168.160.2 and destination IP 1.1.1.1 to 192.168.160.1.
- Gateway receives the packet and forwards it to 1.1.1.1, because the destination IP is not its own.
- Just before the packet leaves, an SNAT rule in the POSTROUTING chain is matched, so Netfilter rewrites the source IP to 2.2.2.2. The destination IP is unchanged, still 1.1.1.1.
- 1.1.1.1 receives a packet from 2.2.2.2 and replies to this address.
- Gateway at 2.2.2.2 receives the reply and magically(TM) knows that this connection has undergone SNAT, so the reply should be forwarded to 192.168.160.2.
- Gateway rewrites the destination IP address to 192.168.160.2 and forwards the packet. The source IP is unchanged, still 1.1.1.1. This technically is a DNAT (we will cover DNAT later) that automatically comes with the SNAT rule. We will not see this rule, but this is what Linux does under the hood to make SNAT work.
- Server receives the response with source IP 1.1.1.1 and destination IP 192.168.160.2.

For the magic part, SNAT relies on conntrack (it's you again!), Linux's connection tracking module, to look up the correct destination addresses for incoming reply packets.
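To make the "magic" a bit more concrete, here is a toy model of the bookkeeping conntrack does for SNAT. It is a sketch under heavy simplifying assumptions, not how the kernel stores connections: real entries are keyed on the full tuple (addresses, protocol, and ports or ICMP identifiers), while this one only remembers address pairs in a temporary file, so it supports one connection per remote host. PUBLIC_IP, CT_TABLE and the function names are hypothetical.

```shell
#!/bin/sh
# Toy SNAT + connection tracking on Gateway.
PUBLIC_IP=2.2.2.2
CT_TABLE=$(mktemp)   # each line: "<original source> <remote destination>"

# Outbound packet from Server: remember it, then rewrite the source.
# $1 = source, $2 = destination. Prints the rewritten "source destination".
snat_out() {
  echo "$1 $2" >> "$CT_TABLE"
  echo "$PUBLIC_IP $2"
}

# Reply arriving at Gateway: find the tracked connection and rewrite the
# destination back to Server; the reply's source (remote host) is kept.
# $1 = source, $2 = destination. Fails if no tracked connection matches.
unsnat_in() {
  local orig
  [ "$2" = "$PUBLIC_IP" ] || return 1
  orig=$(awk -v r="$1" '$2 == r { print $1; exit }' "$CT_TABLE")
  [ -n "$orig" ] || return 1
  echo "$1 $orig"
}
```

Running snat_out 192.168.160.2 1.1.1.1 and then unsnat_in 1.1.1.1 2.2.2.2 reproduces the two rewrites in the list above, while a reply from a host Server never contacted finds no entry.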

Enough talk. Let's get some hands-on with SNAT. This command will do the work for us:

gateway# iptables -t nat -A POSTROUTING -o venet0 -j SNAT --to-source 2.2.2.2

My Gateway is an OpenVZ VPS, so its network interface is venet0. Yours might be different. You can find your physical interface name with the command ip a by looking for your public IP address: the device your public IP address is attached to is the one to put in this command.

The output interface here must be specified, or packets sent through wg0 will also be SNAT-ed, which leads to unwanted results we will explain later.

Writing SNAT rules is a little bit tedious since we have to manually specify the new source IP. Netfilter provides a special target called MASQUERADE to eliminate the need for this step. We can use this command instead:

gateway# iptables -t nat -A POSTROUTING -o venet0 -j MASQUERADE

If we use MASQUERADE, Netfilter automatically determines the right source IP to use. Recall that SNAT happens in the POSTROUTING chain, after the routing decision has been made, so at this point Netfilter already knows the outgoing interface and just grabs the IP address attached to it. Most of the time, this logic fits our needs, so we can just use MASQUERADE whenever we want to do a "normal" SNAT.
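Instead of scanning ip a by eye, you can also ask the kernel which route it would use to reach an internet host. The helper below (route_field is a hypothetical name) extracts one field from ip route get output: dev is the interface to pass to -o, and src is the source address the kernel would choose, which usually matches what MASQUERADE picks when the public IP is attached directly to the VPS. On a VPS behind the provider's NAT, src will be a private address instead.

```shell
#!/bin/sh
# Print the value that follows keyword $1 (e.g. "dev", "src", "via") in
# `ip route get` output read from stdin. Prints nothing if it is absent.
route_field() {
  awk -v key="$1" '{
    for (i = 1; i < NF; i++)
      if ($i == key) { print $(i + 1); exit }
  }'
}
```

On Gateway, ip route get 1.1.1.1 | route_field dev prints the interface name, and the same pipeline with src prints the address SNAT would otherwise need spelled out.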

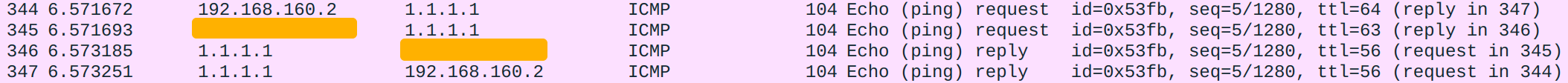

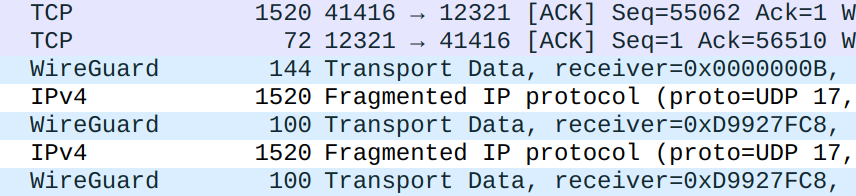

You should now be able to ping external hosts through WireGuard. A packet capture at this point on Gateway might help you understand why things started to work:

The orange marks are my public IP address. This result corresponds to our theoretical packet flow perfectly. Now take a small break - we have the basic WireGuard tunnel up and running!

Selective Proxying: Advanced Routing Configuration

In our current setup, all outbound traffic is forwarded through our WireGuard tunnel, but this is not what I want. I only want outbound SMTP traffic to be tunneled.

If we want finer control over routing, we have to prevent wg-quick from creating the default routing rules. wg-quick offers a Table option: when it is set to a custom table, the routes for AllowedIPs go into that table and the policy rules we saw earlier are not created, so you can define your own policy that dictates when to use the tunnel as an outbound proxy. To use this feature, we add this line to wg0.conf on Server, in the Interface section:

Table = wireguard

Remember to bring the wg0 interface down and up again after editing the config file. Inside the Linux kernel, routing tables are identified by a numeric ID, but here we are using a name to refer to one. For this alias to resolve, we have to define it first in the file /etc/iproute2/rt_tables. The default content of this file should be:

#
# reserved values
#
255 local
254 main
253 default
0 unspec
#
# local
#
#1 inr.ruhep

We can see 4 routing tables defined (technically 3, because unspec means "all" and is not a real routing table). We have talked about them before. Here we define our own by adding a line:

25 wireguard

The number is arbitrary; I use 25 because it is the port for SMTP. After defining this routing table alias and editing wg0.conf, we can restart the wg0 interface. This time, if you check your public IP address with an IP-echoing website (I use curl icanhazip.com), you will see Server's real upstream IP address instead of Gateway's: our outbound traffic no longer goes through WireGuard. Let's print the routing rules again:

server$ ip rule
0: from all lookup local
32766: from all lookup main
32767: from all lookup default
server$ ip route show table wireguard
default dev wg0 scope link

Now, all WireGuard-related rules are gone. However, the routing table wireguard has been populated with a default route via the wg0 interface. We can configure our routing policy to direct packets to this routing table whenever we want to route them via WireGuard.

Specifically, we want to route outgoing packets with TCP destination port 25 through the tunnel. The corresponding policy is:

server# ip rule add ipproto tcp dport 25 lookup wireguard pref 2500

pref is the preference level of this rule. The smaller the value, the earlier the rule is evaluated. We just need a value smaller than 32766 (the rule that looks up main); here I picked 2500.
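Rule selectors are plain matches on packet fields, which makes it easy to reason about what a rule catches. As a toy model (select_table is a hypothetical name; this is not how the kernel walks its rule list), the function below encodes the rule above, with an optional fourth argument standing in for an iif selector. Per the ip-rule documentation, packets generated on the host itself match iif lo, which the model reflects by passing lo as the input interface.

```shell
#!/bin/sh
# Toy model of the pref-2500 routing rule.
#   $1 = protocol, $2 = destination port, $3 = input interface of the packet
#        ("lo" for packets generated on Server itself)
#   $4 = interface required by an iif selector, or "" for no iif selector
select_table() {
  if [ "$1" = tcp ] && [ "$2" -eq 25 ] && { [ -z "$4" ] || [ "$3" = "$4" ]; }; then
    echo wireguard   # rule matches: look up the wireguard table (tunnel)
  else
    echo main        # no match: fall through to the later rules (main)
  fi
}
```

Note that without an iif selector, select_table tcp 25 wg0 "" also returns wireguard: any forwarded packet with destination port 25 matches, not only the ones our own mail server sends out.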

We can test the effect of this rule by trying to connect to Google's SMTP server:

server$ nc smtp.gmail.com 25
220 smtp.gmail.com ESMTP t65-20020a814644000000b005569567aac1sm5144152ywa.106 - gsmtp

Great! The connection is successfully established. However, this setup will eventually break even though it works at first glance. Since we removed the default routing policies, Server no longer knows how to reach Gateway correctly: it will eventually try to reach 192.168.160.1 through the physical NIC, which of course will not work. Routing decisions are cached, though, so the first few packets hit the cache and get routed correctly. To fix this, we have to tell Server that all traffic to 192.168.160.0/24 should be routed through wg0:

server# ip route add 192.168.160.0/24 dev wg0

Tip: if you run ip route flush cache before adding this route, you will immediately see that routing over the WireGuard tunnel no longer works.

There is one more caveat. An email server does not only connect to port 25 of other SMTP servers; it also listens on port 25 itself. This simple routing policy makes connections from other email servers to us fail, because their packets are also directed to the wireguard routing table and routed away from us. Thus, we need to be more specific and only route SMTP traffic that actually originates from our own mail server. For example, I replaced the policy with one limited to packets from the interface br-mailcow (yes, I use Mailcow):

server# ip rule del pref 2500
server# ip rule add ipproto tcp dport 25 iif br-mailcow lookup wireguard pref 2500

IPv6

However, when we use openssl to test, the connection fails.

server$ openssl s_client -connect smtp.google.com:25 -starttls smtp

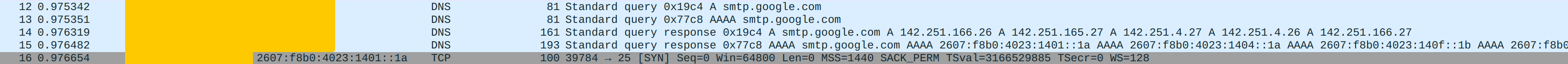

(no output)Again, I did some packet capturing and found this:

openssl attempted an IPv6 connection, which did not get routed over our WireGuard tunnel because we did not configure IPv6 at all. nc did not connect over IPv6 because it is not supported...

We could choose to disable IPv6 and go with IPv4 only, but here I'll configure IPv6 as well. To enable IPv6, we just need to assign IPv6 addresses to both endpoints. We will use unique local IPv6 addresses (fc00::/7) for routing inside our WireGuard network. For example, if we use the subnet fc00:0:0:160::/64, this can be the new Address setting on Server:

Address = 192.168.160.2, fc00:0:0:160::2

Gateway gets fc00:0:0:160::1 in the same way. Don't forget to update AllowedIPs in the Peer section! On Server, we add ::0/0 to allow routing all IPv6 addresses, just like we did for IPv4. On Gateway, we add Server's IPv6 address as a /128.
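Because iptables and ip6tables maintain completely separate rule sets, one way to keep the IPv4 and IPv6 firewalls in step is to issue each change through a small wrapper. A sketch; both, IPT4 and IPT6 are hypothetical names, and the variables exist only so that the wrapper can be dry-run.

```shell
#!/bin/sh
# Apply the same firewall arguments to IPv4 (iptables) and IPv6 (ip6tables).
IPT4=${IPT4:-iptables}
IPT6=${IPT6:-ip6tables}

both() {
  # Stop before touching IPv6 if the IPv4 command failed.
  "$IPT4" "$@" && "$IPT6" "$@"
}
```

On Gateway, both -P FORWARD DROP, both -A FORWARD -i wg0 -j ACCEPT and both -t nat -A POSTROUTING -o venet0 -j MASQUERADE would then cover both families. Routing is a separate tool: the ip route and ip rule commands still need explicit ip -6 variants.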

Apart from this, everything we did for IPv4 needs to be done again for IPv6, because the two are handled completely separately. First, to enable forwarding for IPv6, we use the sysctl setting net.ipv6.conf.all.forwarding = 1. We also want to set the default policy of the FORWARD chain to DROP for IPv6 using ip6tables, and allow forwarding to / from Server:

gateway# ip6tables -P FORWARD DROP
gateway# ip6tables -A FORWARD -i wg0 -j ACCEPT
gateway# ip6tables -A FORWARD -o wg0 -j ACCEPT

Next, we need to configure routing for IPv6 as well. Don't forget to add the port 25 routing policy to the IPv6 policy list:

server# ip -6 route add fc00:0:0:160::/64 dev wg0
server# ip -6 rule add ipproto tcp dport 25 lookup wireguard pref 2500

Next, we need to add the SNAT configuration, a.k.a. the MASQUERADE rule, on Gateway:

gateway# ip6tables -t nat -A POSTROUTING -o venet0 -j MASQUERADE

Now we can reinitialize the interfaces on both ends and test it out:

server$ ping6 fc00:0:0:160::1
PING fc00:0:0:160::1(fc00:0:0:160::1) 56 data bytes
64 bytes from fc00:0:0:160::1: icmp_seq=1 ttl=64 time=61.5 ms

And now openssl should be able to connect:

server$ openssl s_client -connect smtp.google.com:25 -starttls smtp
CONNECTED(00000003)
depth=2 C = US, O = Google Trust Services LLC, CN = GTS Root R1
verify return:1
...

The Mismatching MTU: a Mysterious Issue with Docker

Now that outbound SMTP is routed through WireGuard normally, it's high time we tested a more realistic scenario: connecting from Docker containers. Let's bring up a simple Arch Linux container (because I love Arch so much) and install openssl.

server# docker run -it archlinux:latest bash

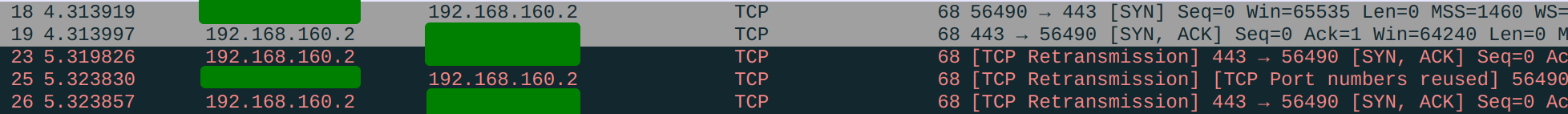

container# pacman -Sy opensslI did the testing with several popular email providers. Gmail works fine, but we have some trouble with iCloud. Sometimes it works just fine, but sometimes this happens:

container# openssl s_client -connect mx02.mail.icloud.com:25 -starttls smtp
CONNECTED(00000003)
*no further output*

We are stuck with no output, i.e. the TLS handshake does not finish. However, this problem never happens if we initiate the connection directly on Server, outside Docker containers.

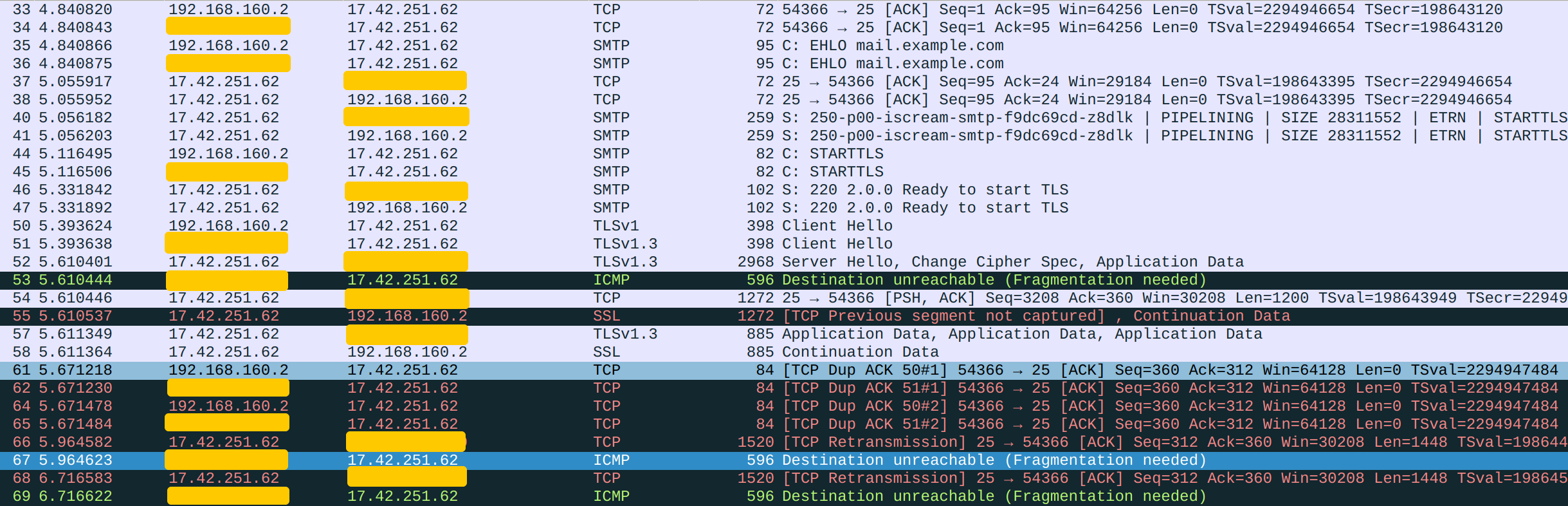

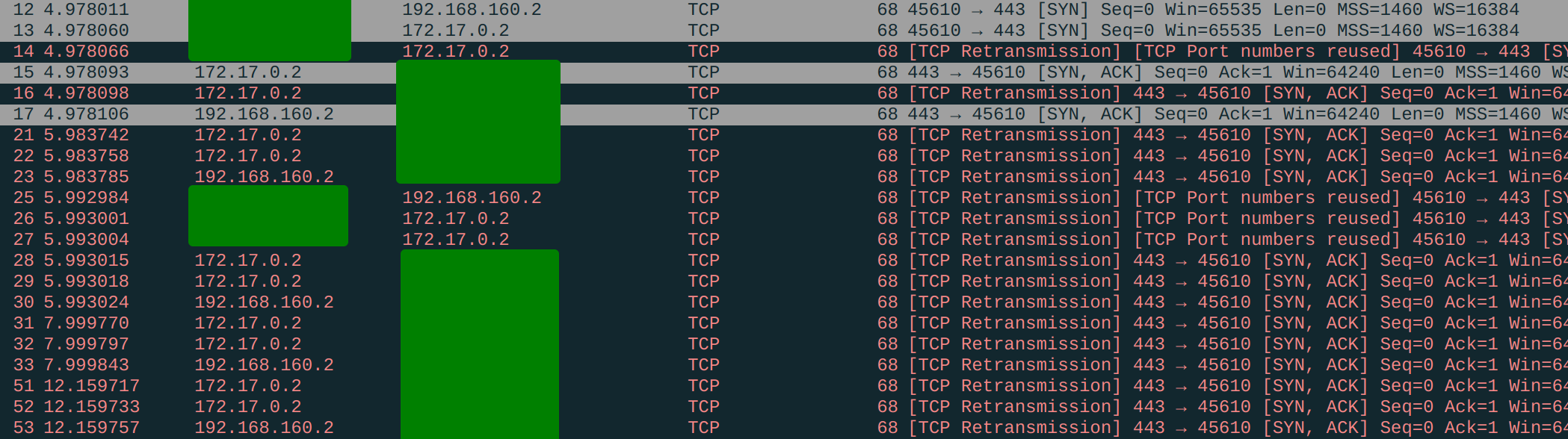

Let's again invite our favorite tcpdump and Wireshark to investigate the issue for us. This is what we get on Server:

172.17.0.2 is the container's IP assigned by Docker. 17.42.251.62 is Apple's SMTP server.

The initial SMTP communication is fine, but after we send the Client Hello, we never get a Server Hello back. We get some broken packets, but they do not form a valid Server Hello, so the TLS handshake is stalled. We need to capture some packets on Gateway to investigate further:

Redacted is Gateway's public IP.

Here is the story we can read from the packet log. Everything before the TLS handshake was fine. Server sent Apple a Client Hello, and Apple replied with a Server Hello. However, rather than forwarding the Server Hello along, Gateway rejected it with an ICMP Destination unreachable (Fragmentation needed) message. This ICMP control message means that the packet was too large to be sent over the next link and was not allowed to be fragmented. Thus, Gateway is saying: "I cannot pass this packet on. Please send it again in smaller pieces." Gateway expected Apple to resend the same content in several smaller packets, and Apple indeed did. This process actually has a name: Path MTU Discovery.

Before I explain further, I should mention some background knowledge. The link layer imposes a restriction: every packet transmitted must fit within a certain size, called the MTU, or Maximum Transmission Unit. If an IP packet larger than this size needs to go out over a link, the IP layer breaks it down into smaller fragments, and the recipient reassembles them before presenting the packet to higher layers.

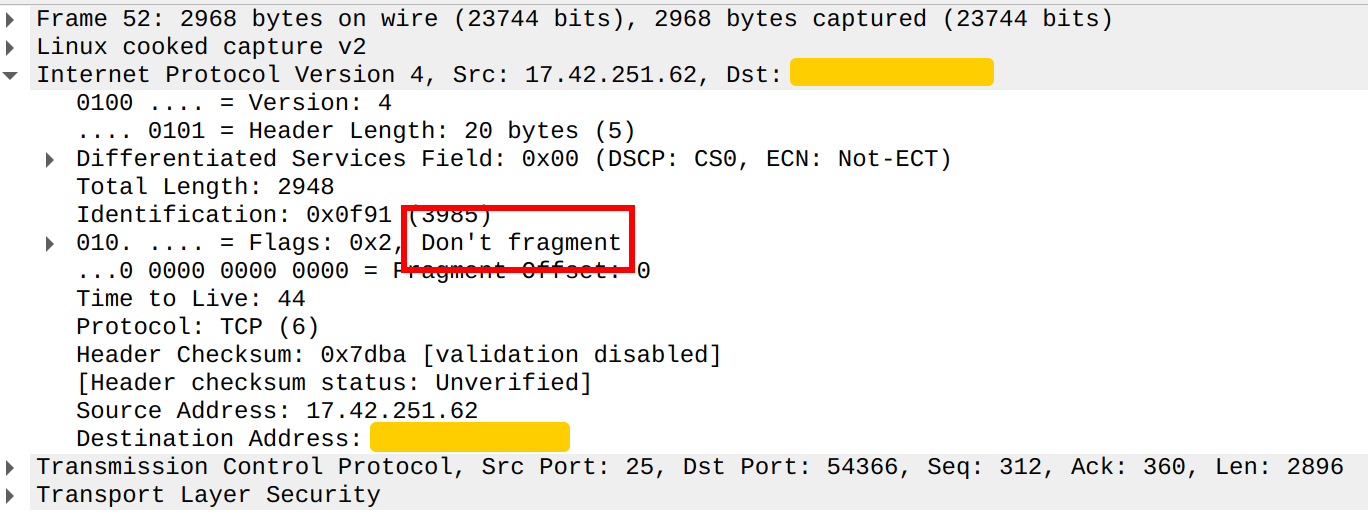

However, Apple's Server Hello packet comes with a special IP flag "Don't fragment" (DF):

This explicitly tells all routers that this packet should never be fragmented. If a router would need to fragment the packet, it must drop it instead and send the sender an ICMP "Fragmentation needed" message. That is exactly what Gateway did.

The real story is even more complicated than this. On top of the network layer (IP), there is a transport layer (TCP) before we reach the application layer (SMTP, upgraded to TLS via STARTTLS). TCP also provides a similar functionality, called segmentation. TLS Server Hello messages are inherently large because the server's certificate is sent along with them. The size of a certificate is usually far beyond common MTU values, so some sort of fragmentation must happen. I have never been able to understand why the DF flag is always set on TLS packets, but it seems that some people believe path MTU discovery is good for performance. Rather than letting IP fragmentation happen, people seem to prefer either PMTUD or TCP segmentation, because TCP segmentation can utilize TCP Segmentation Offloading, a hardware feature present on most modern network adapters.

I am not in any sense sure about things I said in this paragraph, as I have not been able to find any solid reference material about this. Please enlighten me if you do.

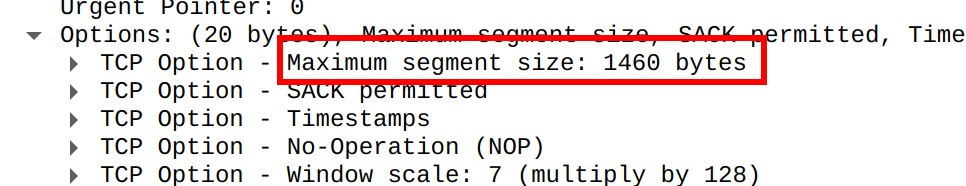

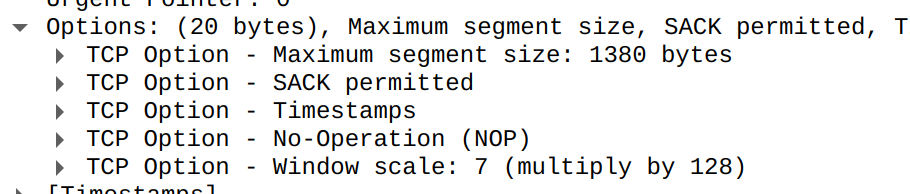

TCP decides how large its segments are based on the Maximum Segment Size (MSS), which is computed by subtracting the IP and TCP headers' size from the MTU. Each side announces its MSS in the TCP handshake, telling the other side the largest segment it is willing to receive. We can actually read it from our packet log. If we inspect the TCP SYN packet, this value can be found in the Options section:

As we see, the MSS value used was 1460. This value came from whoever initiated the TCP session: it took the MTU of the outbound interface and subtracted 40, 20 for the IP header and 20 for the TCP header. TCP headers can be up to 60 bytes long, because up to 40 bytes of options may follow the fixed 20-byte header, but the MSS is computed against the fixed header only; any option bytes a segment carries come out of its payload.
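The arithmetic can be written down as a small sketch. Header sizes are the fixed minimums (IPv4 20 bytes, IPv6 40 bytes, TCP 20 bytes). The WireGuard overhead used below (outer IP header, 8-byte UDP header, 16-byte WireGuard data header and 16-byte authentication tag) is my own addition to the text: it shows that WireGuard's 1420 default is 1500 minus the 80-byte worst case of an IPv6 outer header. The function names are hypothetical.

```shell
#!/bin/sh
# MSS for a given MTU. $1 = MTU, $2 = IP version (4 or 6, default 4)
mss_for_mtu() {
  local ip_hdr=20
  if [ "${2:-4}" = 6 ]; then
    ip_hdr=40
  fi
  echo $(( $1 - ip_hdr - 20 ))   # minus the fixed 20-byte TCP header
}

# Largest inner MTU that fits inside WireGuard's encapsulation on a link
# with MTU $1. $2 = IP version of the outer (tunnel) packets, default 4.
wg_inner_mtu() {
  local outer_ip=20
  if [ "${2:-4}" = 6 ]; then
    outer_ip=40
  fi
  # outer IP + UDP (8) + WireGuard data header (16) + Poly1305 tag (16)
  echo $(( $1 - outer_ip - 8 - 16 - 16 ))
}
```

mss_for_mtu 1500 gives the 1460 seen in the SYN above (docker0's MTU), while mss_for_mtu 1420 gives 1380, the value a SYN leaving through wg0 would carry.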

I am again unsure about how the two fallback features (PMTUD and TCP segmentation) work together, but my theory is:

1. Server sends a TCP SYN with an MSS of 1460, via Gateway.
2. Apple attempts PMTUD by sending a 2498B Server Hello (Packet #52) with the DF flag set.
3. Gateway receives the packet and finds it larger than wg0's MTU.
4. Gateway attempts IP fragmentation but aborts because of the DF flag.
5. Gateway attempts TCP segmentation by dividing the packet into 1500B TCP segments. That is, 1460B (MSS) plus 40B of headers.
6. Gateway notices that 1500B still exceeds wg0's MTU.
7. The packet is discarded and an ICMP Fragmentation needed message (Packet #53) is sent back to Apple.
8. Apple attempts TCP segmentation on its end by resending the Server Hello in several segments. This corresponds to Packets #55, #66 and #68 in the log. Actually, packet #68's TCP payload is the same as #52, the initial Server Hello. The lengths of the TCP payloads of #55, #66 and #68 add up to that of #52.
9. Gateway receives the 1500B segments and repeats steps 3 through 7.
10. Apple repeats step 8, Gateway repeats steps 3 through 7, and so on and so forth. The TLS handshake never finishes.

Wireshark shows 1520B and 2968B as the packets' sizes because the link layer header (20 bytes long) is included. However, although MTU is a link layer concept, it does not include the link layer header.

Knowing the reason behind the failure, we can proceed to fixing the issue. Let's check the current value of wg0's MTU:

gateway$ ip l

(other interfaces)

9: wg0: <POINTOPOINT,MULTICAST,NOARP,UP,LOWER_UP> mtu 1420 qdisc mq state UNKNOWN mode DEFAULT group default qlen 500

link/none

Aha! The default MTU set by WireGuard is 1420, smaller than the 1500 implied by the MSS value 1460. But why would such a mismatch exist? Recall that the MSS was set according to the MTU of the first network interface the SYN goes through, so if we connect directly from Server, it will be set to 1380, because the first network interface is wg0 with MTU 1420. We can actually verify this:

server$ ip l

(other interfaces)

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default

link/ether 02:42:51:32:04:c7 brd ff:ff:ff:ff:ff:ff

4: wg0: <POINTOPOINT,NOARP,UP,LOWER_UP> mtu 1420 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/none

However, when we connect from a Docker container on a bridge network, the first network interface a packet encounters is Docker's bridge instead! This bridge has a different (higher) MTU of 1500, which explains why the MSS was set to 1460, making the response segments too large to pass through wg0.

Interestingly, Gmail takes a different approach. Its Server Hello does not have the DF tag set, so Gateway uses IP fragmentation to break the packet down, and TCP segmentation is not involved. Thus, the mismatch does not affect connections made with Gmail.

Now that we see where the problem came from, there are several approaches to resolving it. We could simply make Gateway's wg0 MTU match Server's docker0 MTU by setting MTU = 1500 in the [Interface] section of wg0.conf. But is this our best option? If you actually go with it, you will notice that your network speed is severely degraded! According to my iperf3 tests, if I connect directly over the internet, the speed between Server and Gateway can reach up to 70Mbps, with an average of 50Mbps. However, the WireGuard tunnel only manages about 20Mbps!

Let's take a small step back, because there is one question we didn't quite address: why does WireGuard set the default MTU to 1420 while most modern OSes default to 1500? There is actually a pretty good reason. WireGuard tunnels network layer traffic, but itself works on the transport layer (UDP). Each packet WireGuard tunnels is a complete IP packet, and WireGuard has some overhead of its own. Specifically, WireGuard adds its own 32 bytes (a 16-byte message header and a 16-byte authentication tag), an 8-byte UDP header and a 20-byte IPv4 header to every IP packet it tunnels. If IPv6 is used, the IP header is 20 bytes larger. This makes the packet size grow by up to 80 bytes, exactly the difference between the default MTU of physical interfaces and that of WireGuard interfaces. We can verify this from a packet log captured during an iperf3 speed test:

I used IPv4 for the test, so the difference is only 60 bytes.
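The overhead adds up as follows. This sketch assumes the sizes of WireGuard's transport data message as I understand them (a 16-byte header plus a 16-byte authentication tag) and ignores the padding WireGuard applies to the inner packet, which as far as I know is never allowed to push a full-MTU packet over the limit. The function names are hypothetical.

```python
# Sketch of WireGuard's worst-case per-packet overhead. Assumed sizes:
# a transport data message has a 16-byte header (type, reserved bytes,
# receiver index, counter) and a 16-byte Poly1305 authentication tag.
WG_MESSAGE_HEADER = 16
WG_AUTH_TAG = 16
UDP_HEADER = 8
OUTER_IP_HEADER = {4: 20, 6: 40}  # IPv4 / IPv6 header without options


def wireguard_overhead(outer_ip_version: int) -> int:
    """Bytes added to each tunneled packet for the given outer IP version."""
    return (OUTER_IP_HEADER[outer_ip_version] + UDP_HEADER
            + WG_MESSAGE_HEADER + WG_AUTH_TAG)


def tunnel_mtu(link_mtu: int) -> int:
    """Largest inner packet that fits the link without fragmentation,
    whichever IP version the outer packet uses."""
    return link_mtu - max(wireguard_overhead(v) for v in OUTER_IP_HEADER)


if __name__ == "__main__":
    print(wireguard_overhead(4), wireguard_overhead(6), tunnel_mtu(1500))
```

The IPv4 figure of 60 bytes is the difference observed in the iperf3 capture, and taking the IPv6 worst case is what lands on 1420.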

The reason now becomes clear. If WireGuard used a standard MTU, then under heavy load all full-sized packets sent through the tunnel would need to be fragmented, because WireGuard ultimately has to send each packet plus its additional headers over the physical interface. After adding the overhead, packets get up to 1500+80 bytes long, while the physical interface only allows packets of up to 1500 bytes. The fragmentation here is extremely inefficient because the second fragment will always be tiny (for IPv4, only 60 bytes of payload):

A 1500B packet (shown as 1520B because, again, the 20B link layer header is included) becomes 1540B with the WireGuard overhead (excluding the outer IP header). The first IP fragment can only be 1500B long, of which 20B is the IP header, so only 1480B of the packet will be delivered in it. The remaining 60B will be sent in the second fragment. Taking into account the 20B IP header, the second fragment is 80B long, exactly matching our observation of 100B packets in the capture.

However, if the WireGuard interface has an MTU of 1420, wg0 will deal with far fewer oversized packets, because the TCP MSS will (hopefully) be set to 1380. This way, encapsulated WireGuard packets never exceed 1500B and therefore never need to be fragmented.
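The fragment sizes above can be reproduced with a small Python model of IPv4 fragmentation. It is a sketch assuming a 1500B physical MTU and an option-less 20B IPv4 header; the 40 bytes of UDP plus WireGuard overhead are the figures used above. Every fragment except the last must carry a multiple of 8 payload bytes, because the fragment offset field counts 8-byte units.

```python
# Sketch: split an IPv4 payload into fragments that fit a given link MTU.
IPV4_HEADER = 20  # bytes, no IP options


def ipv4_fragment_sizes(payload_len: int, mtu: int = 1500) -> list[int]:
    """Return the total size (IP header included) of each IPv4 fragment.

    All fragments but the last carry a multiple of 8 payload bytes,
    since the fragment offset field is expressed in 8-byte units.
    """
    max_chunk = (mtu - IPV4_HEADER) // 8 * 8
    sizes = []
    remaining = payload_len
    while remaining > 0:
        chunk = min(max_chunk, remaining)
        sizes.append(IPV4_HEADER + chunk)
        remaining -= chunk
    return sizes


if __name__ == "__main__":
    udp_and_wg = 8 + 32  # UDP header + WireGuard header and tag
    print(ipv4_fragment_sizes(1500 + udp_and_wg))  # inner MTU 1500
    print(ipv4_fragment_sizes(1420 + udp_and_wg))  # inner MTU 1420
```

With a 1500B inner packet, this yields a 1500B fragment followed by an 80B one, while a 1420B inner packet fits in a single 1480B outer packet.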

Thus, it is not a good idea to increase WireGuard's default MTU. The better solution is to resolve the mismatch between the TCP MSS and our Path MTU (the smallest MTU along the network path), rather than making MTUs agree. Luckily, iptables provides a way for us to tamper with the MSS: the TCPMSS target. Here is the most common usage:

server# iptables -t mangle -A POSTROUTING -p tcp --tcp-flags SYN SYN -o wg0 -j TCPMSS --clamp-mss-to-pmtu

OpenWrt seems to use --tcp-flags SYN,RST SYN. I do not understand why RST needs to be taken into consideration here. Please explain it to me if you know why.

The TCPMSS target can set a specific MSS, but just like with SNAT, most of the time we don't want to bother looking up the correct value and just want Netfilter to be "smart". This is what --clamp-mss-to-pmtu does: it sets the MSS according to the PMTU. --tcp-flags SYN SYN means that the SYN flag must be set for a packet to match this rule (the first argument lists the flags to examine, the second the ones that must be set). This is because the MSS is only negotiated once, at the very beginning of the handshake, so we only need to modify it there.
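Here is a rough Python model of what --clamp-mss-to-pmtu does to the MSS option of a SYN. I am going by the iptables-extensions man page, which describes the clamp as path MTU minus 40 for IPv4 and minus 60 for IPv6. The assumption that the target never raises an MSS that is already small enough reflects my understanding of the kernel code rather than anything tested here, and `clamp_mss_to_pmtu` is a hypothetical name.

```python
def clamp_mss_to_pmtu(advertised_mss: int, pmtu: int, ipv6: bool = False) -> int:
    """Model of TCPMSS --clamp-mss-to-pmtu applied to a SYN's MSS option.

    The cap is PMTU - 40 for IPv4 and PMTU - 60 for IPv6. An MSS that is
    already below the cap is left untouched (the value is only lowered).
    """
    cap = pmtu - (60 if ipv6 else 40)
    return min(advertised_mss, cap)


if __name__ == "__main__":
    # A SYN from a container on docker0 (MSS 1460) leaving through wg0 (MTU 1420).
    print(clamp_mss_to_pmtu(1460, 1420))
```

In other words, the container's 1460 gets rewritten to 1380 on its way out of wg0, which is what Server would have advertised on its own had it connected directly.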

Cheers! We have successfully set up our WireGuard tunnel and configured outbound SMTP traffic to go through it.

Persisting Our Changes

To summarize, we made these changes to the routing table and firewall:

- We added a routing rule 192.168.160.0/24 dev wg0 to the default routing table, and did the same for IPv6.

- We added routing policies to Server to route all outbound SMTP traffic through the tunnel.

- We added MASQUERADE rules to Gateway to enable proper SNAT.

- We added some rules to Gateway's filter table to allow forwarding packets to / from Server.

These changes will not be preserved across reboots because firewall and routing rules are not stored on disk. Note that this change will be preserved:

- We added a routing table in /etc/iproute2/rt_tables.

And these changes will be applied when wg0 is active:

- We specified wg0's MTU in its configuration file.

- We told WireGuard to add its routing rule to our wireguard table.

We actually don't want WireGuard-related routing rules to be in effect at all times. Rather, we would like them to apply only when wg0 is active. Luckily, wg-quick allows us to run commands when an interface is brought up / down. Add this to the [Interface] section on Server:

# specify how to access the peer (through wg0)

PostUp = ip route add 192.168.160.0/24 dev wg0

PostUp = ip -6 route add fc00:0:0:160::/64 dev wg0

# PostDown is not needed because the rules will be automatically removed when wg0 goes down

# fix MSS issue

PostUp = iptables -t mangle -A POSTROUTING -p tcp --tcp-flags SYN SYN -o wg0 -j TCPMSS --clamp-mss-to-pmtu

PostDown = iptables -t mangle -D POSTROUTING -p tcp --tcp-flags SYN SYN -o wg0 -j TCPMSS --clamp-mss-to-pmtu

PostUp = ip6tables -t mangle -A POSTROUTING -p tcp --tcp-flags SYN SYN -o wg0 -j TCPMSS --clamp-mss-to-pmtu

PostDown = ip6tables -t mangle -D POSTROUTING -p tcp --tcp-flags SYN SYN -o wg0 -j TCPMSS --clamp-mss-to-pmtu

# let all outbound traffic to port 25 go through wireguard

PostUp = ip rule add ipproto tcp dport 25 iif br-mailcow lookup wireguard pref 2500

PostDown = ip rule del pref 2500

PostUp = ip -6 rule add ipproto tcp dport 25 iif br-mailcow lookup wireguard pref 2500

PostDown = ip -6 rule del pref 2500

And on Gateway:

# allow forwarding to / from wg0

PostUp = iptables -A FORWARD -o wg0 -j ACCEPT

PostDown = iptables -D FORWARD -o wg0 -j ACCEPT

PostUp = iptables -A FORWARD -i wg0 -j ACCEPT

PostDown = iptables -D FORWARD -i wg0 -j ACCEPT

PostUp = ip6tables -A FORWARD -o wg0 -j ACCEPT

PostDown = ip6tables -D FORWARD -o wg0 -j ACCEPT

PostUp = ip6tables -A FORWARD -i wg0 -j ACCEPT

PostDown = ip6tables -D FORWARD -i wg0 -j ACCEPT

# do snat for forwarded traffic

PostUp = iptables -t nat -A POSTROUTING -o venet0 -j MASQUERADE

PostDown = iptables -t nat -D POSTROUTING -o venet0 -j MASQUERADE

PostUp = ip6tables -t nat -A POSTROUTING -o venet0 -j MASQUERADE

PostDown = ip6tables -t nat -D POSTROUTING -o venet0 -j MASQUERADE

Don't forget to clear our manual changes before restarting WireGuard to test it out.
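Since every PostUp needs a PostDown that undoes it, a copy-paste slip (for example a PostDown that uses -A instead of -D) silently leaves rules behind every time the interface goes down. The hypothetical helper below is a rough sanity check for that. It only pairs iptables / ip6tables rules added with -A against PostDown lines that use -D, and ignores ip rule / ip route commands and -I insertions.

```python
import shlex


def unmatched_postups(conf_text: str) -> list[str]:
    """Return PostUp iptables/ip6tables rules that have no matching PostDown.

    A PostUp rule added with -A counts as matched if some PostDown line is
    the same command with -A replaced by -D.
    """
    added = []       # (original text, token tuple) for each PostUp -A rule
    deleted = set()  # PostDown -D rules, normalised back to their -A form
    for line in conf_text.splitlines():
        key, sep, value = line.partition("=")
        key, value = key.strip(), value.strip()
        if not sep or key not in ("PostUp", "PostDown"):
            continue  # section headers, comments, other settings
        tokens = shlex.split(value)
        if not tokens or tokens[0] not in ("iptables", "ip6tables"):
            continue  # ip rule / ip route commands are not checked
        if key == "PostUp" and "-A" in tokens:
            added.append((value, tuple(tokens)))
        elif key == "PostDown" and "-D" in tokens:
            deleted.add(tuple("-A" if t == "-D" else t for t in tokens))
    return [text for text, tokens in added if tokens not in deleted]


if __name__ == "__main__":
    with open("/etc/wireguard/wg0.conf") as conf:
        for rule in unmatched_postups(conf.read()):
            print("no PostDown for:", rule)
```

An empty result means every iptables rule added on PostUp is removed again on PostDown.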

DNAT

The other purpose of this WireGuard setup is to publish network services through Gateway's public IP address. In a usual network setup, this is achieved through port forwarding. However, since we are not using a specialized router OS, we will have to implement port forwarding on our own. Luckily, this isn't too complicated a concept. The technical term for port forwarding is DNAT, or Destination Network Address Translation. Unlike SNAT, which rewrites the source IP address, DNAT rewrites the destination IP address, and optionally the destination port as well.

To get started, let's consider an example where we want to forward port 443, the standard HTTPS port. While SNAT happens in the POSTROUTING phase, DNAT takes place during PREROUTING. Thus, a DNAT rule looks like this:

gateway# iptables -t nat -A PREROUTING ! -i wg0 -p tcp --dport 443 -j DNAT --to-destination 192.168.160.2

gateway# ip6tables -t nat -A PREROUTING ! -i wg0 -p tcp --dport 443 -j DNAT --to-destination fc00:0:0:160::2

! -i wg0 is there to prevent redirecting traffic from Server back to Server. Without it, Server basically could no longer access any HTTPS website through the tunnel. Although we do not (yet) send HTTPS traffic through the tunnel, we do not want to leave behind potential issues that could come back to bite us in the future.
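As a mental model, the IPv4 rule above only touches the destination of matching packets. The Python sketch below is a simplification: `Packet` and `nat_prerouting` are hypothetical names, 2.2.2.2 and 3.3.3.3 are dummy addresses for Gateway and an internet host, and 4.4.4.4 stands in for some HTTPS website Server might visit.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Packet:
    in_iface: str  # interface the packet arrived on
    proto: str
    src: str
    dst: str
    dport: int


SERVER_WG_IP = "192.168.160.2"


def nat_prerouting(pkt: Packet) -> Packet:
    """Model of: -t nat -A PREROUTING ! -i wg0 -p tcp --dport 443
    -j DNAT --to-destination 192.168.160.2"""
    if pkt.in_iface != "wg0" and pkt.proto == "tcp" and pkt.dport == 443:
        return replace(pkt, dst=SERVER_WG_IP)  # the source is left untouched
    return pkt


if __name__ == "__main__":
    from_internet = Packet("venet0", "tcp", "3.3.3.3", "2.2.2.2", 443)
    from_server = Packet("wg0", "tcp", "192.168.160.2", "4.4.4.4", 443)
    print(nat_prerouting(from_internet))  # redirected to Server
    print(nat_prerouting(from_server))    # left alone thanks to ! -i wg0
```

Because the source address survives DNAT, Server will see the internet host's real IP, which is the property the rest of this section tries to keep.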

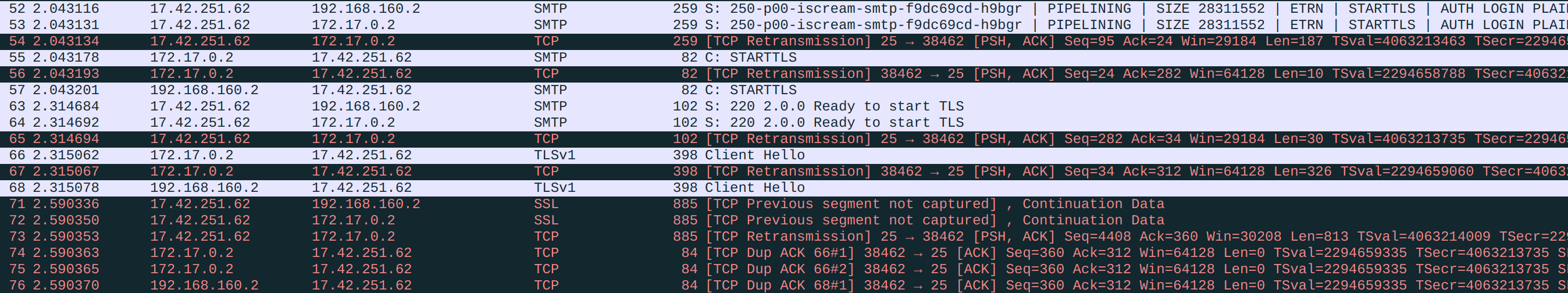

The Routing Dilemma: to SNAT or not to SNAT

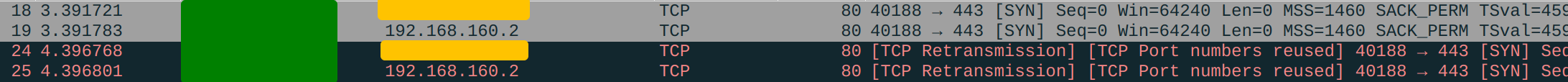

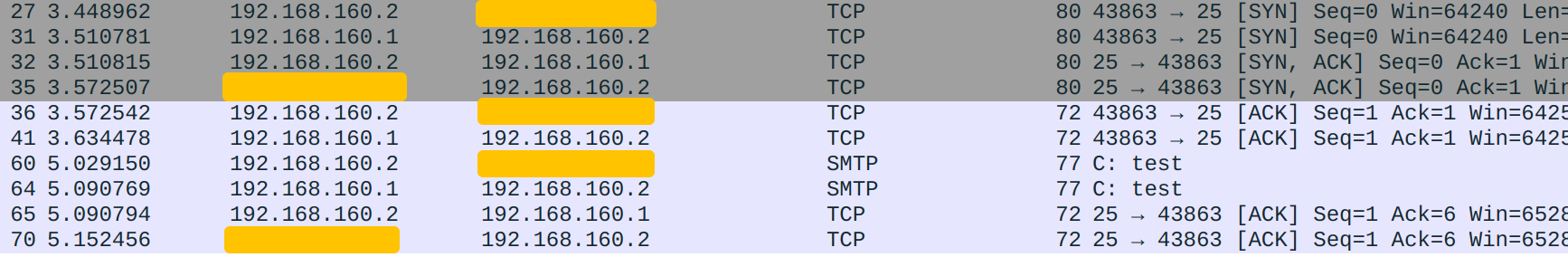

This again looks intuitive but does not work. You will notice that connections to TCP port 443 of Gateway's public IP fail. Again, let's use tcpdump to diagnose this issue. We will be using netcat instead of a real HTTPS server for simplicity. We first listen on port 443 on Server:

server# nc -l -v -p 443

We then connect to Gateway's port 443 from an internet host (this can just be your laptop). This is what we see in Server's packet log:

Redacted is my laptop's public IP address.

We can rest assured that our DNAT is already working, because the SYN successfully reached Server. The SYN-ACK, however, does not seem to be delivered: the other side keeps resending the same SYN, while Server keeps replying with SYN-ACKs that never arrive. Indeed, if we inspect the packet log on Gateway, no SYN-ACK comes back:

Why was the SYN-ACK not delivered? If we click on the SYN-ACK to inspect its details, we can notice this in the link layer:

Note that this packet has a source MAC address set, and if we list all our interfaces' MAC addresses, this one actually matches that of our physical network adapter. The link layer protocol is shown as "Linux cooked capture v2" because I used -i any - if I had listened on wg0, this packet would not be there at all. However, this packet will be captured if we listen on the physical interface.

Apparently, we have some routing issues here, leading to the SYN-ACK being routed to the wrong interface. To explain why this happens, we first need to know that by default, routing decisions are made solely according to the packet's destination IP address. A SYN-ACK is not in any sense different from a SYN sent from Server. Since we configured all packets but those targeted at TCP port 25 to go through the physical interface, the SYN-ACK will go there as well.

Adding SNAT to Gateway's wg0 interface would make the TCP connection succeed:

gateway# iptables -t nat -A POSTROUTING -o wg0 -j MASQUERADE

But this is what we intentionally avoided when we were configuring SNAT: we only did SNAT for the physical interface for a reason!

server# nc -l -v -p 443

listening on [any] 443 ...

connect to [192.168.160.2] from (UNKNOWN) [192.168.160.1] <some random port number>

SNAT rewrites the source IP address, so Server will no longer know where the packet originally came from. This defeats our very purpose: to preserve source IP address information. Hence this is not what we'll do; we have to figure out a way to make the return packets go through wg0 without using SNAT on Gateway.

Making Routing Smarter with Conntrack

Let's simplify the situation by ignoring Docker first and testing on Server directly. Say we have a service that listens on 192.168.160.2. When we connect from 1.2.3.4 (a dummy internet host), the reply packet will have source IP 192.168.160.2 and destination IP 1.2.3.4. Even though routing by default only cares about the destination, we can add a policy to let the router look up the wireguard table for all packets originating from 192.168.160.2:

server# ip rule add from 192.168.160.2 lookup wireguard

To test it out, we can try to connect to an internet SMTP server using netcat:

server$ nc -s 192.168.160.2 smtp.gmail.com 25

220 smtp.gmail.com ESMTP y130-20020a817d88000000b0055a7c2375dfsm36356ywc.101 - gsmtp

By using -s, we explicitly tell netcat to use 192.168.160.2 as the source IP address. Since I configured the outbound SMTP routing policy for docker0 packets only, getting this banner means the connection was tunneled by the new policy, as expected.

While replies sent by a service listening on 192.168.160.2 will now use the wireguard table, connections initiated by Server that do not explicitly use 192.168.160.2 as the source IP address will not be routed through WireGuard. This is because such packets, unlike responses sent from a listening socket, do not have their source IP set until the routing decision has been made. Just before the packet leaves the system, the IP address of the interface responsible for delivering it is assigned as its source IP address, and this happens after the routing phase.

If we test at this point, we should already be able to receive incoming TCP connections on port 443, and we will see the real original source IP address! But we are not done yet because the use of Docker bridge networks complicates things. Docker's port forwarding mechanism (-p) basically turns Server into a gateway, doing DNAT on incoming packets and SNAT on outgoing packets.

Let's spin up a Docker container and test from there. This time, we will add a port forwarding from Server's port 443 to the container's port 443:

server# docker run -it -p 443:443 archlinux bash

And we listen on port 443 inside the container before trying to connect from our laptop:

container# nc -l -v -p 443

Oops, the connection cannot be established. It's time for some more Wireshark:

So the reply packets are indeed SNAT-ed and have the source IP 192.168.160.2 (see packets #17, #23, etc.), but they are never delivered correctly, resulting in endless retransmissions. Our routing policy stopped working! Why?

Again, let me remind you that SNAT can only happen on the POSTROUTING chain. This means SNAT is done after the routing decision has been made. When the router saw the packet, it still had the source IP 172.17.0.2 and hence did not match our policy, so the router sent it to the physical interface instead of the desired wg0. By the time Docker's SNAT rewrites the source IP, it is already too late.
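The ordering problem can be sketched in a few lines of Python. This is a hypothetical model, not how the kernel is structured: it keeps only the from 192.168.160.2 policy (pref 2501, as in the complete configuration at the end of this note) and the catch-all main rule, omits the local table, and shows that a source rewrite after the table was chosen changes nothing about the route. 3.3.3.3 is a dummy client address.

```python
# Hypothetical model of Server's policy routing at the moment the routing
# decision is made. Each rule is (pref, predicate, table).
RULES = [
    (2501, lambda pkt: pkt["src"] == "192.168.160.2", "wireguard"),
    (32766, lambda pkt: True, "main"),
]


def choose_table(pkt: dict) -> str:
    """Return the table of the first matching rule, in order of preference."""
    for _pref, matches, table in sorted(RULES, key=lambda rule: rule[0]):
        if matches(pkt):
            return table
    raise LookupError("no rule matched")


def route_then_snat(pkt: dict, new_src: str) -> tuple[str, dict]:
    """Routing runs first; the source rewrite only happens afterwards
    (POSTROUTING), so it cannot influence the table that was chosen."""
    table = choose_table(pkt)
    return table, {**pkt, "src": new_src}


if __name__ == "__main__":
    syn_ack = {"src": "172.17.0.2", "dst": "3.3.3.3"}  # container's reply
    print(route_then_snat(syn_ack, "192.168.160.2"))
```

The reply leaves with the "right" source address, but it was already sent to the main table and therefore to the physical interface.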

It is impossible to make SNAT-ed packets go to the router again. Does it mean we are stranded?

Let's take a step back and write down what we want: For any packet sent, if it is a part of a connection originally sent to 192.168.160.2, route it through wg0.

The core problem is the ability to track a connection - and this is exactly what conntrack does! We have mentioned it several times, and now it's time to take a serious look at it. Remember the second part of SNAT? For SNAT to function, there has to be an accompanying DNAT process to rewrite the destination IP of reply packets. How on earth does Linux know what new destination IP to use?

Conntrack (connection tracking) is the Netfilter subsystem that keeps a table of connections. Each time a network connection is initiated, Linux adds an entry for it to the conntrack table. This table can be read from /proc/net/nf_conntrack:

server# cat /proc/net/nf_conntrack

*irrelevant entries omitted*

ipv4 2 tcp 6 55 SYN_RECV src=<my laptop's public IP> dst=192.168.160.2 sport=45610 dport=443 src=172.17.0.2 dst=<my laptop's public IP> sport=443 dport=45610 mark=0 zone=0 use=2

This connection is in the SYN_RECV state, meaning that the SYN has been received and the system should be trying to reach back with a SYN-ACK, but no ACK has been seen. This corresponds to our observation. Following SYN_RECV are two sets of source / destination IP and port information: the first describes the connection's original direction, and the second describes what reply packets are expected to carry, with NAT already taken into account (hence src=172.17.0.2, the container's address).

Netfilter can consult this table for subsequent packets in the same TCP stream, and SNAT is the most common use case: SNAT's reverse process matches a reply packet against the entry's reply tuple and rewrites the reply's destination IP back to the original source IP.
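To illustrate what conntrack stores, here is a small Python parser for a TCP entry in the format shown above, plus a lookup that finds the original destination of the connection a reply packet belongs to. Both functions are hypothetical helpers (the lookup is merely named after the --ctorigdst option discussed next), the parser assumes the exact TCP layout above, and 3.3.3.3 stands in for my redacted laptop address.

```python
import re

TUPLE_KEYS = ("src", "dst", "sport", "dport")


def parse_conntrack_line(line: str) -> dict:
    """Parse one TCP entry from /proc/net/nf_conntrack.

    Assumes the layout shown above: protocol fields, then the TCP state,
    then the original tuple followed by the (NAT-aware) reply tuple.
    """
    tokens = line.split()
    tuples, current = [], {}
    for key, value in re.findall(r"(\w+)=(\S+)", line):
        if key not in TUPLE_KEYS:
            continue  # mark=, zone=, use= and similar fields are ignored
        if key in current:  # a repeated key starts the reply tuple
            tuples.append(current)
            current = {}
        current[key] = value
    tuples.append(current)
    return {"state": tokens[5], "original": tuples[0], "reply": tuples[1]}


def ctorigdst(entries: list[dict], pkt: dict):
    """Original destination of the connection a reply packet belongs to,
    found by matching the packet against each entry's reply tuple."""
    for entry in entries:
        if entry["reply"] == pkt:
            return entry["original"]["dst"]
    return None


if __name__ == "__main__":
    line = ("ipv4 2 tcp 6 55 SYN_RECV src=3.3.3.3 dst=192.168.160.2 "
            "sport=45610 dport=443 src=172.17.0.2 dst=3.3.3.3 sport=443 "
            "dport=45610 mark=0 zone=0 use=2")
    entry = parse_conntrack_line(line)
    syn_ack = {"src": "172.17.0.2", "dst": "3.3.3.3",
               "sport": "443", "dport": "45610"}
    print(entry["state"], ctorigdst([entry], syn_ack))
```

The container's SYN-ACK carries 172.17.0.2 as its source, yet the entry still remembers that the connection was originally addressed to 192.168.160.2, which is exactly the piece of information routing needs.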

We had wanted to utilize SNAT's conntrack "magic" to identify reply packets sent from the container before routing. As SNAT won't do us this favor, why not just implement its magic by ourselves?

The good news is that iptables has a conntrack match extension, which exposes the option --ctorigdst. This is exactly what we want: matching packets by the original destination IP recorded in the conntrack table:

server# iptables -t mangle -A PREROUTING -m conntrack --ctorigdst 192.168.160.2 --ctstate ESTABLISHED,RELATED -j MARK --set-mark 0xa

The PREROUTING chain in the mangle table is consulted before the one in the nat table, but after the packet goes through conntrack. It allows us to set firewall marks on packets before NAT-ing and routing them. Firewall marks, or fwmarks, are tags that Linux uses internally to track packets so that it can do specific things to these packets later. They are very useful when we want the router and the firewall to collaborate. Note that a fwmark is a local concept and never leaves the system, because it is not written into the packet.

Our rule will identify all packets that belong to a connection originally sent to 192.168.160.2, or to a related connection, and mark them with 0xa. The router will later see the mark, and we can ask it to route packets carrying this particular mark:

server# ip rule add fwmark 0xa lookup wireguard

Now both the SYN and SYN-ACK should be delivered correctly, but we missed something in our rule, which results in the ACK not being delivered properly. The ACK also goes through the PREROUTING chain in the mangle table, where it matches our rule, gets the 0xa mark, and is thrown into the WireGuard tunnel. It is then discarded because its source IP, the client's public IP, is not in the AllowedIPs that Gateway has configured for Server.

We really only want this rule to work one way, so we modify it slightly by adding ! -d 192.168.160.2. Now inbound packets no longer match the marking rule, while outbound packets still do.
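The final marking logic, together with the fwmark routing policy, can be modelled as two small predicates. This is a sketch assuming that the client's ACK is still addressed to 192.168.160.2 when it hits the mangle PREROUTING chain, since Docker's DNAT in the nat table only runs afterwards; the function names are hypothetical and 3.3.3.3 is a dummy client address.

```python
from typing import Optional

SERVER_WG_IP = "192.168.160.2"
WG_MARK = 0xA


def mangle_prerouting_mark(dst: str, ct_orig_dst: str, ct_state: str) -> Optional[int]:
    """Model of: -m conntrack --ctorigdst 192.168.160.2
    --ctstate ESTABLISHED,RELATED ! -d 192.168.160.2 -j MARK --set-mark 0xa"""
    if (ct_orig_dst == SERVER_WG_IP
            and ct_state in ("ESTABLISHED", "RELATED")
            and dst != SERVER_WG_IP):
        return WG_MARK
    return None


def table_for_mark(fwmark: Optional[int]) -> str:
    """Model of: ip rule add fwmark 0xa lookup wireguard (otherwise: main)."""
    return "wireguard" if fwmark == WG_MARK else "main"


if __name__ == "__main__":
    cases = {
        "SYN from client": ("192.168.160.2", "192.168.160.2", "NEW"),
        "SYN-ACK from container": ("3.3.3.3", "192.168.160.2", "ESTABLISHED"),
        "ACK from client": ("192.168.160.2", "192.168.160.2", "ESTABLISHED"),
    }
    for name, args in cases.items():
        print(name, "->", table_for_mark(mangle_prerouting_mark(*args)))
```

Only the outbound SYN-ACK ends up in the wireguard table; without the ! -d condition, the inbound ACK would be marked as well.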

If you only use Docker containers to host network services, you can go ahead and remove the from 192.168.160.2 lookup wireguard policy because it is not at all useful... However, do keep it if you expect to host any service directly on Server, because locally generated packets do not go through PREROUTING (see the Netfilter diagram). However, they do go through OUTPUT. Thus, another solution would be to remove the policy and add the marking rule to OUTPUT.

NAT Loopback (NAT Reflection)

Our setup is now almost perfect, except for one small problem, a very well-known one in networking. For various reasons, you might want Server to be able to access itself through its public IP address. While we can reach Server's network services via Gateway's public IP from the internet, reaching them from within the WireGuard internal network is a completely different situation. Here is a comparison (suppose Gateway has the public IP 2.2.2.2):

| Step | From Internet Host 3.3.3.3 | From Server |
|---|---|---|
| 1 | 3.3.3.3 sends packet with source IP 3.3.3.3 and destination IP 2.2.2.2. | Server (connector) sends packet with destination IP 2.2.2.2. Packet is routed to wg0 according to some rule. |
| 2 | | Server assigns source IP 192.168.160.2 before sending the packet through wg0 to 192.168.160.1 to forward. |
| 3 | Packet arrives at venet0 on Gateway, with source IP 3.3.3.3 and destination IP 2.2.2.2. | Packet arrives at wg0 on Gateway, with source IP 192.168.160.2 and destination IP 2.2.2.2. |
| 4 | Packet matches our DNAT rule. Destination IP is changed to 192.168.160.2. | Packet does not match our DNAT rule because of the ! -i wg0. Destination IP is unchanged. |
| 5 | Because routing happens after DNAT, Gateway's router sees the modified destination IP address and forwards the packet. | Gateway's router sees that the packet was sent to Gateway, and delivers the packet. |
| 6 | Gateway sends the packet with source IP 3.3.3.3 and destination IP 192.168.160.2 through wg0. | Gateway does not have a socket listening on the specified port, so connection is refused. |

Apparently, we need to add another rule to match packets sent from Server as well, and apply DNAT accordingly:

gateway# iptables -t nat -A PREROUTING -i wg0 -s 192.168.160.0/24 -d 2.2.2.2 -p tcp --dport 443 -j DNAT --to-destination 192.168.160.2

However, it alone will not fix our problem. Let's continue to see what happens if we had applied this rule:

| Step | From Internet Host 3.3.3.3 | From Server |
|---|---|---|
| 4 | Packet matches our DNAT rule. Destination IP is changed to 192.168.160.2. | Packet matches our DNAT rule. Destination IP is changed to 192.168.160.2. |
| 5 | Gateway's router forwards the packet. | Gateway's router forwards the packet. |
| 6 | Gateway sends the packet with source IP 3.3.3.3 and destination IP 192.168.160.2 through wg0. | Gateway sends the packet with source IP 192.168.160.2 and destination IP 192.168.160.2 through wg0. |
| 7 | Forwarded packet arrives at wg0 on Server, with source IP 3.3.3.3 and destination IP 192.168.160.2. | Forwarded packet arrives at wg0 on Server, with source IP 192.168.160.2 and destination IP 192.168.160.2. |
| 8 | Server (listener) replies with a packet with source IP 192.168.160.2 and destination IP 3.3.3.3. | Server (listener) replies with a packet with source IP 192.168.160.2 and destination IP 192.168.160.2. This is a packet for the local host, so it is delivered locally without going through WireGuard. |
| 9 | 3.3.3.3 gets a well-formed reply. | 192.168.160.2 (connector) gets an ill-formed reply with source IP 192.168.160.2 instead of the expected 2.2.2.2 (destination IP of the original packet). |

It might be a bit unclear how to fix this situation. The common fix is a very niche SNAT rule:

gateway# iptables -t nat -A POSTROUTING -o wg0 -s 192.168.160.0/24 -d 192.168.160.2 -p tcp --dport 443 -j MASQUERADE

This is a well-known trick called NAT loopback, a.k.a. NAT hairpinning or NAT reflection. You might notice that it actually goes back to SNAT-ing wg0's outbound traffic, but in a more restricted manner. For this reason, the solution is imperfect. However, we at least know that these connections come from the WireGuard LAN. If we assume the LAN is secure, this is much less of a security issue than SNAT-ing all outbound packets. I will accept the small flaw here. It might be possible to achieve perfect NAT loopback where source IP is preserved, but I did not dig into that. Please enlighten me if you know how to do that : )

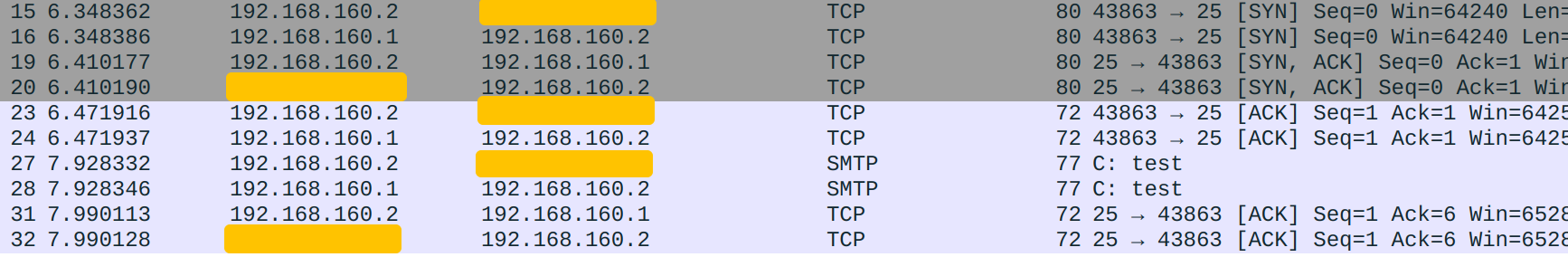

Now I will explain how the trick works. It is a bit convoluted because 192.168.160.2 is now both server and client. I will call it Server when it acts as the server and Client otherwise.

For convenience, I made some changes here that should not affect the overall process:

- I did the testing with port 25 (instead of 443) because I set up WireGuard tunneling for it.

- I am using nc -s 192.168.160.2 to make sure that outbound packets are tunneled. It does not make sense to leave out this source IP and rely on a routing policy to tunnel the packets anyway, because you ultimately need a valid source IP for Server to reach back to you. This is either your real public IP or your WireGuard IP. If you use your real public IP, the situation falls back to connecting from the internet, so NAT loopback is not needed. If you connect from within a Docker container, Docker's SNAT will rewrite the source IP, so you will see similar results.

1. Client initiates a connection to 2.2.2.2 by sending a SYN (Client Packet #27).

2. Client Packet #27 is tunneled through WireGuard because it matches our outbound SMTP policy. It reaches Gateway on wg0 and becomes Gateway Packet #15.

3. Gateway performs DNAT on the PREROUTING chain. The destination IP is changed to 192.168.160.2.

4. Gateway performs SNAT (our new rule!) on the POSTROUTING chain. The source IP is changed to 192.168.160.1.

5. Gateway Packet #15 becomes #16 and is forwarded.

6. Gateway Packet #16 reaches Server on wg0 and becomes Server Packet #31. From Server's perspective, it just got a SYN from Client.

7. Server replies with Server Packet #32. #32's IP header information is #31's swapped, because it is a reply to #31.

8. Server Packet #32 is tunneled through WireGuard because it matches our conntrack rule.

9. Server Packet #32 reaches Gateway and becomes Gateway Packet #19.

10. From Gateway's perspective, it has done SNAT to the SYN (Gateway Packet #15 to #16) in step 4, and now it is getting the corresponding SYN-ACK, so it should do a DNAT to complete the SNAT. Gateway looks up the conntrack table and finds the original source IP 192.168.160.2. Hence it rewrites Packet #19's destination IP to 192.168.160.2. Note that this DNAT is done at the conntrack point, not on PREROUTING.

11. Just like SNAT rules need accompanying DNATs, DNATs need an SNAT in the reverse direction as well (think about why!). Because Gateway has done DNAT to the SYN in step 3, it now rewrites Packet #19's source IP to the original destination IP of Packet #15, 2.2.2.2. Similarly, this SNAT is done at the conntrack point.

12. Gateway Packet #19 becomes #20 and is forwarded. Because the source IP is now 2.2.2.2, our SNAT rule is not matched.

13. Gateway Packet #20 reaches Client and becomes Client Packet #35. From Client's perspective, it just got Server's SYN-ACK.

14. Client sends an ACK to complete the TCP handshake. From Client's perspective, it is handshaking with 2.2.2.2. From Server's perspective, it is handshaking with 192.168.160.1.

15. The ACK reaches Server the same way the SYN did (Client #36 - Gateway #23 - Gateway #24 - Server #41).

16. The TCP handshake completes successfully. Subsequent packets follow the same pattern.
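The address rewrites in steps 3, 4, 10 and 11 can be condensed into a toy Python model. It assumes both DNAT rules from this section are installed, treats the loopback MASQUERADE rule as optional, and lets Server's reply bypass Gateway whenever it is addressed to Server itself. The names are hypothetical, and 2.2.2.2 / 3.3.3.3 are the dummy addresses from the tables above.

```python
from dataclasses import dataclass

GATEWAY_PUBLIC = "2.2.2.2"   # dummy public IP from the tables above
GATEWAY_WG = "192.168.160.1"
SERVER_WG = "192.168.160.2"
WG_PREFIX = "192.168.160."   # crude stand-in for 192.168.160.0/24


@dataclass(frozen=True)
class Flow:
    src: str
    dst: str


def reply_seen_by_connector(connector: str, loopback_snat: bool) -> Flow:
    """Follow a SYN sent to GATEWAY_PUBLIC:443 and return the SYN-ACK's
    addresses as the connecting host receives them."""
    original = Flow(src=connector, dst=GATEWAY_PUBLIC)

    # Step 3: PREROUTING DNAT rewrites the destination to Server.
    translated = Flow(src=original.src, dst=SERVER_WG)

    # Step 4: the loopback MASQUERADE rule only matches WireGuard-LAN sources.
    if loopback_snat and translated.src.startswith(WG_PREFIX):
        translated = Flow(src=GATEWAY_WG, dst=translated.dst)

    # Server answers by swapping the addresses it received.
    reply = Flow(src=translated.dst, dst=translated.src)

    if reply.dst == SERVER_WG:
        # Addressed to Server itself: delivered locally, Gateway never sees it.
        return reply

    # Steps 10-11: Gateway matches the reply against its conntrack entry
    # and undoes both translations, restoring the original addresses.
    return Flow(src=original.dst, dst=original.src)


if __name__ == "__main__":
    print(reply_seen_by_connector("3.3.3.3", loopback_snat=False))
    print(reply_seen_by_connector(SERVER_WG, loopback_snat=False))
    print(reply_seen_by_connector(SERVER_WG, loopback_snat=True))
```

Without the loopback SNAT, Server receives a reply from 192.168.160.2 (the ill-formed reply in the second table); with it, the reply appears to come from 2.2.2.2, just as for an internet host.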

DNS Override

Rather than the convoluted NAT loopback, you might want to address the problem from a different perspective. If you access Gateway through a domain name instead of a plain IP, you can override the DNS record on Server to point to itself. Note that there are also some nuances here, and this solution also has imperfections. For example, it does not work when you map a Gateway port to a Server port with a different port number.

Conclusion & The Complete Configuration

Say congratulations to yourself! You reached the end of this note. Now you should have a working WireGuard virtual LAN that tunnels the traffic you specify and does port forwarding just like a real LAN. Hopefully you also learned something new about:

- Routing tables and policies

- TCP/IP

- IPv6

- DNAT and SNAT

- MTU

- NAT loopback

For your convenience, I am posting my complete WireGuard configuration files here. This is /etc/wireguard/wg0.conf on Gateway:

[Interface]

PrivateKey = <Gateway private key>

Address = 192.168.160.1, fc00:0:0:160::1

ListenPort = 51820

MTU = 1500

# allow necessary forwarding

PostUp = iptables -A FORWARD -o wg0 -j ACCEPT

PostDown = iptables -D FORWARD -o wg0 -j ACCEPT

PostUp = iptables -A FORWARD -i wg0 -j ACCEPT

PostDown = iptables -D FORWARD -i wg0 -j ACCEPT

PostUp = ip6tables -A FORWARD -o wg0 -j ACCEPT

PostDown = ip6tables -D FORWARD -o wg0 -j ACCEPT

PostUp = ip6tables -A FORWARD -i wg0 -j ACCEPT

PostDown = ip6tables -D FORWARD -i wg0 -j ACCEPT

# snat for wireguard traffic

PostUp = iptables -t nat -A POSTROUTING -o venet0 -j MASQUERADE

PostDown = iptables -t nat -D POSTROUTING -o venet0 -j MASQUERADE

PostUp = ip6tables -t nat -A POSTROUTING -o venet0 -j MASQUERADE

PostDown = ip6tables -t nat -D POSTROUTING -o venet0 -j MASQUERADE

# dnat

PostUp = iptables -t nat -A PREROUTING ! -i wg0 -p tcp --dport 443 -j DNAT --to-destination 192.168.160.2

PostDown = iptables -t nat -D PREROUTING ! -i wg0 -p tcp --dport 443 -j DNAT --to-destination 192.168.160.2

PostUp = iptables -t nat -A PREROUTING -i wg0 -s 192.168.160.0/24 -d <Gateway public IPv4> -p tcp --dport 443 -j DNAT --to-destination 192.168.160.2

PostDown = iptables -t nat -D PREROUTING -i wg0 -s 192.168.160.0/24 -d <Gateway public IPv4> -p tcp --dport 443 -j DNAT --to-destination 192.168.160.2

PostUp = ip6tables -t nat -A PREROUTING ! -i wg0 -p tcp --dport 443 -j DNAT --to-destination fc00:0:0:160::2

PostDown = ip6tables -t nat -D PREROUTING ! -i wg0 -p tcp --dport 443 -j DNAT --to-destination fc00:0:0:160::2

PostUp = ip6tables -t nat -A PREROUTING -i wg0 -s fc00:0:0:160::/64 -d <Gateway public IPv6> -p tcp --dport 443 -j DNAT --to-destination fc00:0:0:160::2

PostDown = ip6tables -t nat -D PREROUTING -i wg0 -s fc00:0:0:160::/64 -d <Gateway public IPv6> -p tcp --dport 443 -j DNAT --to-destination fc00:0:0:160::2

# nat loopback - common

PostUp = iptables -t nat -A POSTROUTING -o wg0 -s 192.168.160.0/24 -d 192.168.160.2 -p tcp --dport 443 -j MASQUERADE

PostDown = iptables -t nat -D POSTROUTING -o wg0 -s 192.168.160.0/24 -d 192.168.160.2 -p tcp --dport 443 -j MASQUERADE

PostUp = ip6tables -t nat -A POSTROUTING -o wg0 -s fc00:0:0:160::/64 -d fc00:0:0:160::2 -p tcp --dport 443 -j MASQUERADE

PostDown = ip6tables -t nat -D POSTROUTING -o wg0 -s fc00:0:0:160::/64 -d fc00:0:0:160::2 -p tcp --dport 443 -j MASQUERADE

[Peer]

PublicKey = <Server public key>

PresharedKey = <PSK>

AllowedIPs = 192.168.160.2/32, fc00:0:0:160::2/128

This is /etc/wireguard/wg0.conf on Server:

[Interface]

PrivateKey = <Server private key>

Address = 192.168.160.2, fc00:0:0:160::2

Table = wireguard

MTU = 1500

# accessing the peer

PostUp = ip route add 192.168.160.0/24 dev wg0

PostUp = ip -6 route add fc00:0:0:160::/64 dev wg0

# fix MSS issue

PostUp = iptables -t mangle -A POSTROUTING -p tcp --tcp-flags SYN SYN -o wg0 -j TCPMSS --clamp-mss-to-pmtu

PostDown = iptables -t mangle -D POSTROUTING -p tcp --tcp-flags SYN SYN -o wg0 -j TCPMSS --clamp-mss-to-pmtu

PostUp = ip6tables -t mangle -A POSTROUTING -p tcp --tcp-flags SYN SYN -o wg0 -j TCPMSS --clamp-mss-to-pmtu

PostDown = ip6tables -t mangle -D POSTROUTING -p tcp --tcp-flags SYN SYN -o wg0 -j TCPMSS --clamp-mss-to-pmtu

# routing outbound SMTP

PostUp = ip rule add ipproto tcp dport 25 iif br-mailcow lookup wireguard pref 2500

PostDown = ip rule del pref 2500

PostUp = ip -6 rule add ipproto tcp dport 25 iif br-mailcow lookup wireguard pref 2500

PostDown = ip -6 rule del pref 2500

# routing responses from host

PostUp = ip rule add from 192.168.160.2 table wireguard pref 2501

PostDown = ip rule del pref 2501

PostUp = ip -6 rule add from fc00:0:0:160::2 table wireguard pref 2501

PostDown = ip -6 rule del pref 2501

# routing responses from containers

PostUp = iptables -t mangle -A PREROUTING -m conntrack --ctorigdst 192.168.160.2 --ctstate ESTABLISHED,RELATED ! -d 192.168.160.2 -j MARK --set-mark 0xa

PostUp = ip rule add fwmark 0xa table wireguard pref 2502

PostDown = iptables -t mangle -D PREROUTING -m conntrack --ctorigdst 192.168.160.2 --ctstate ESTABLISHED,RELATED ! -d 192.168.160.2 -j MARK --set-mark 0xa

PostDown = ip rule del pref 2502

PostUp = ip6tables -t mangle -A PREROUTING -m conntrack --ctorigdst fc00:0:0:160::2 --ctstate ESTABLISHED,RELATED ! -d fc00:0:0:160::2 -j MARK --set-mark 0xa

PostUp = ip -6 rule add fwmark 0xa table wireguard pref 2502

PostDown = ip6tables -t mangle -D PREROUTING -m conntrack --ctorigdst fc00:0:0:160::2 --ctstate ESTABLISHED,RELATED ! -d fc00:0:0:160::2 -j MARK --set-mark 0xa

PostDown = ip -6 rule del pref 2502

[Peer]

PublicKey = <Gateway public key>

PresharedKey = <PSK>

AllowedIPs = 0.0.0.0/0, ::0/0

Endpoint = <Gateway public IP>:51820

PersistentKeepalive = 60

If you landed here directly and took my configuration, please don't forget to:

- Enable TUN/TAP and configure BoringTUN if your Gateway is an OpenVZ VPS

- Enable forwarding for both IPv4 and IPv6 on Gateway

- Set the default policy of the FORWARD chain in the filter table to DROP

- Create the routing table wireguard on Server

- Tailor my config to your own situation (interface names, ports, etc.)

- Persist these changes in some way

- Say "thanks" to me : )
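As a rough illustration of what the Server's routing rules do, here is a toy Python model of Linux policy routing: rules are tried in ascending pref order, and the first matching rule decides which table is consulted. It is a sketch under simplifying assumptions. It models only the IPv4 rules at prefs 2500, 2501 and 2502 plus the usual catch-all main rule (the pref-0 local rule and the default table are left out), and it assumes every consulted table has a route, whereas the real kernel falls through to the next rule when a lookup finds none. The names Packet, Rule, select_table and mangle_prerouting, the container address 172.22.1.5 and the remote addresses are all hypothetical. Only the rules themselves, 192.168.160.2, br-mailcow and the 0xa mark come from the config.

```python
from dataclasses import dataclass, replace
from ipaddress import ip_address, ip_network
from typing import Optional

WG_ADDR = "192.168.160.2"  # Server's WireGuard address (from the config)
WG_MARK = 0xA              # fwmark set by the mangle rule (from the config)


@dataclass(frozen=True)
class Packet:
    src: str
    dst: str
    proto: str = "tcp"
    dport: Optional[int] = None
    iif: Optional[str] = None  # None models locally generated traffic
    fwmark: int = 0


@dataclass(frozen=True)
class Rule:
    pref: int
    table: str
    src: Optional[str] = None  # the "from" selector, as a CIDR
    ipproto: Optional[str] = None
    dport: Optional[int] = None
    iif: Optional[str] = None
    fwmark: Optional[int] = None

    def matches(self, p: Packet) -> bool:
        # A selector left as None means "match anything".
        if self.src is not None and ip_address(p.src) not in ip_network(self.src):
            return False
        if self.ipproto is not None and p.proto != self.ipproto:
            return False
        if self.dport is not None and p.dport != self.dport:
            return False
        if self.iif is not None and p.iif != self.iif:
            return False
        if self.fwmark is not None and p.fwmark != self.fwmark:
            return False
        return True


# The three rule groups from the Server config, plus the catch-all main rule.
RULES = [
    Rule(2500, "wireguard", ipproto="tcp", dport=25, iif="br-mailcow"),
    Rule(2501, "wireguard", src=WG_ADDR + "/32"),
    Rule(2502, "wireguard", fwmark=WG_MARK),
    Rule(32766, "main"),
]


def mangle_prerouting(p: Packet, ct_orig_dst: str, ct_state: str) -> Packet:
    """Model of the PREROUTING MARK rule: mark packets of connections originally
    addressed to WG_ADDR, except packets that are still headed to WG_ADDR."""
    if ct_orig_dst == WG_ADDR and ct_state in ("ESTABLISHED", "RELATED") and p.dst != WG_ADDR:
        return replace(p, fwmark=WG_MARK)
    return p


def select_table(p: Packet, rules=RULES) -> str:
    """Return the table of the first matching rule in ascending pref order.
    Simplification: assumes that table always has a route for the packet."""
    for rule in sorted(rules, key=lambda r: r.pref):
        if rule.matches(p):
            return rule.table
    raise LookupError("no rule matched")


if __name__ == "__main__":
    smtp_out = Packet(src="172.22.1.5", dst="203.0.113.10", dport=25, iif="br-mailcow")
    host_reply = Packet(src=WG_ADDR, dst="198.51.100.7", dport=50000)
    container_reply = mangle_prerouting(
        Packet(src="172.22.1.5", dst="198.51.100.7", dport=50000, iif="br-mailcow"),
        ct_orig_dst=WG_ADDR, ct_state="ESTABLISHED")
    web_out = Packet(src="172.22.1.5", dst="203.0.113.10", dport=443, iif="br-mailcow")
    for name, pkt in [("smtp_out", smtp_out), ("host_reply", host_reply),
                      ("container_reply", container_reply), ("web_out", web_out)]:
        print(name, "->", select_table(pkt))
```

Running it prints wireguard for the first three packets and main for web_out. The container reply is the interesting case. Its source is still the container's bridge address at routing time, so without the 0xa mark it would match none of the wireguard rules and presumably leave through the main table instead of going back through the tunnel.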

Bottom Line, and Some Rants

I always felt, and still feel, that applied Linux networking is difficult to get started with, mainly due to a lack of good guidance. Most of the time I had to dig through small pieces of documentation scattered across the internet, trying to piece them together into a systematic overview of the Linux network stack.

Some of you might say: Use ChatGPT! The short answer is: I did, but it didn't work at all. The long answer is: ChatGPT is very good at bulls**ting, and when it makes up a story, it justifies the story so well that you won't notice it is entirely false until you reach a point where it contradicts itself. It is very easy to be misled if you try to learn things from ChatGPT. So, my advice is to stay away from AI, at least for now, if you want to learn some real computer science.

It is extremely frustrating when someone interested in setting up their own network infrastructure inevitably gets stuck on convoluted networking concepts, intricate and abstract tools, mysterious errors here and there, or a lack of systematic documentation. I wish everyone had a better option than spending days or weeks figuring all of this out alone, so I decided to write down what I did and what I learned, and share it with the rest of the internet. I sincerely hope that someday IT operations will become more beginner-friendly, and that hosting one's own network infrastructure will no longer mean headaches and mess.

Why are the comments on every article all just "1"?

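I just took a look: these comments all came from the same IP, so my guess is that someone wrote a script to keep spamming them. I have deleted those comments and blocked that IP. Thanks for the heads-up!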

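A few months ago I was also researching WireGuard. I downloaded the C source code of drivers/net/wireguard from torvalds/linux and read part of it; Jason Donenfeld's code is of very high quality. I also downloaded the wireguard-go source, which is also very good. wireguard-go does not use anything like the scatterlist (DMA scatter/gather) sg_init_table machinery, so the performance gap is probably considerable. I ported the wireguard-go source almost in its entirety to Zig and Crystal as an experiment (the Noise handshake, the queues, everything). For the TUN side I used some open-source Zig and Crystal code plus C bindings, and it worked perfectly in tests on macOS and Ubuntu. You might want to check out <>, published in 2019 by Peter Wu of Eindhoven University of Technology in the Netherlands; it covers every detail of the WireGuard protocol comprehensively. His PDF helped me enormously and sped up my porting and research.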

Peter Wu, Eindhoven University of Technology (Netherlands), 2019: Master Analysis of the WireGuard protocol (PDF file)

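Another very thorough article, written by a Korean author, is "WireGuard VPN 해부" (WireGuard VPN Dissected) on slowbootkernelhacks blogspot, which I also consulted at the time. The blog describes itself as: "LINUX KERNEL HACKS BY Slowboot~ This blog was created to analyze in depth bootloaders, the Linux kernel & device drivers, and build systems (Yocto, Buildroot, OpenWrt, etc.) on various embedded boards (ARM, MIPS, X86), and to share the results. If you have any questions, feel free to leave a note at any time. Thanks to Linus Torvalds & All Linux Kernel Gurus !"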

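And one by a Japanese author: "次世代VPN WireGuardを改造して作ったL2VPN、L2 WireGuardの話をします" (A talk on L2 WireGuard, an L2VPN built by modifying the next-generation VPN WireGuard).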

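This is a video of the talk given at カーネル/VM探検隊 (Kernel/VM Explorers) online part3 on July 10, 2021. I read a great many articles back then and spent a huge amount of time on the research; I have lost count: articles from all over, Japanese, Korean, Russian, lots and lots of them. After that I spent a lot more time studying Elligator, which Tor's obfs4 uses to make keys look like random noise, and an IEEE paper applying SVM machine learning to obfs4 was also very interesting.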